https://en.wikipedia.org/wiki/Operating_system#History

https://en.wikipedia.org/wiki/History_of_operating_systems

https://en.wikipedia.org/wiki/Timeline_of_operating_systems

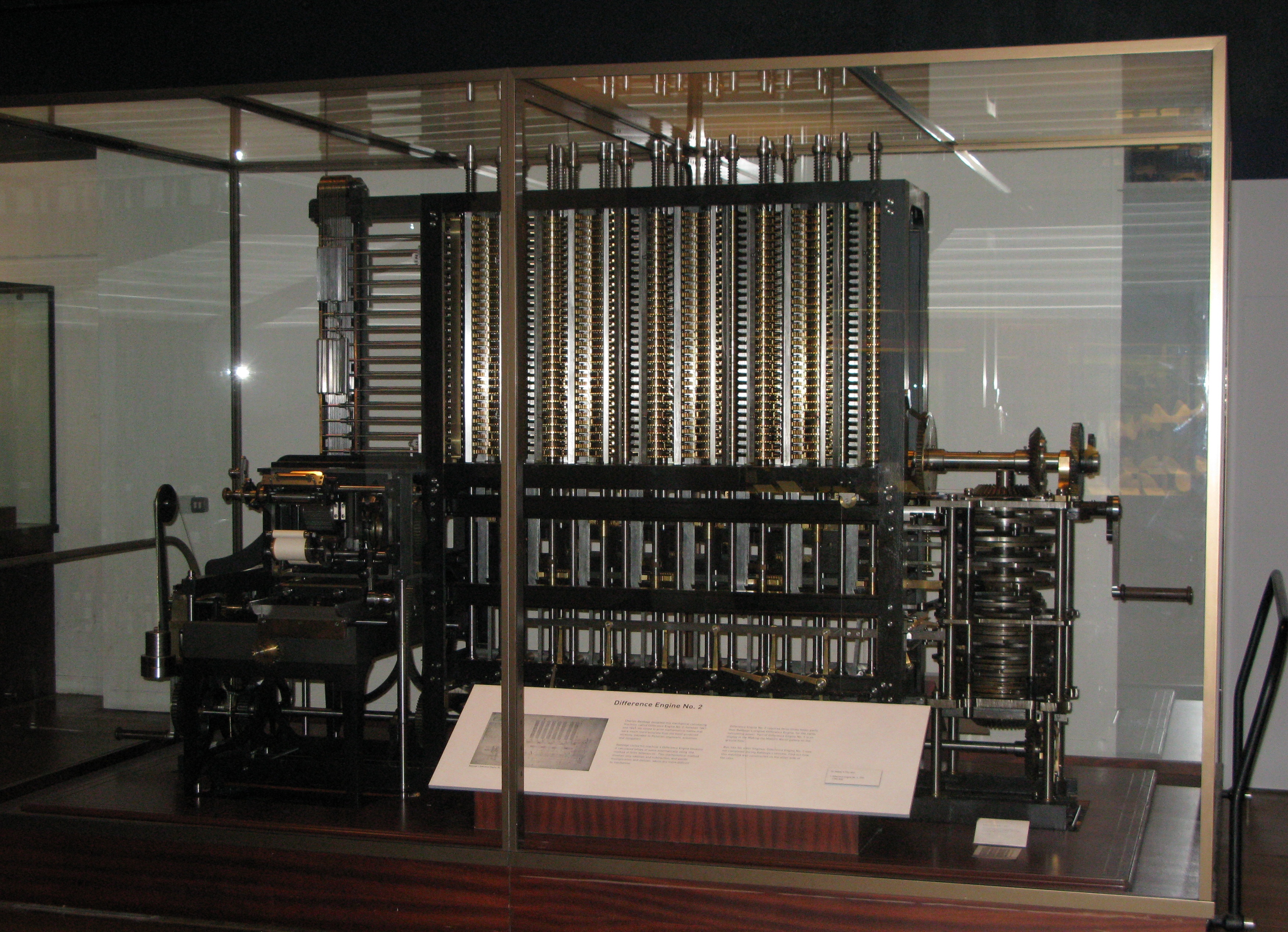

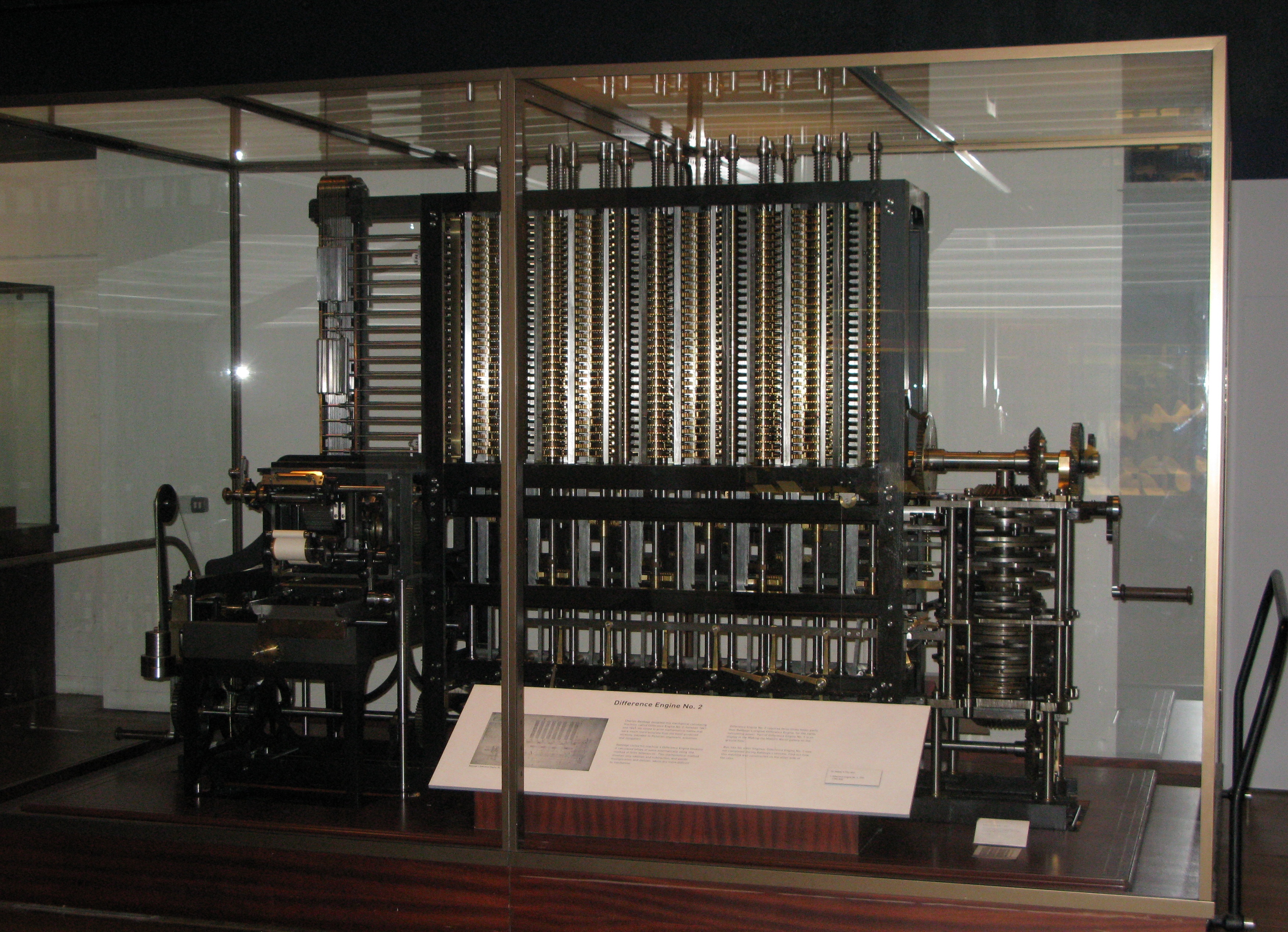

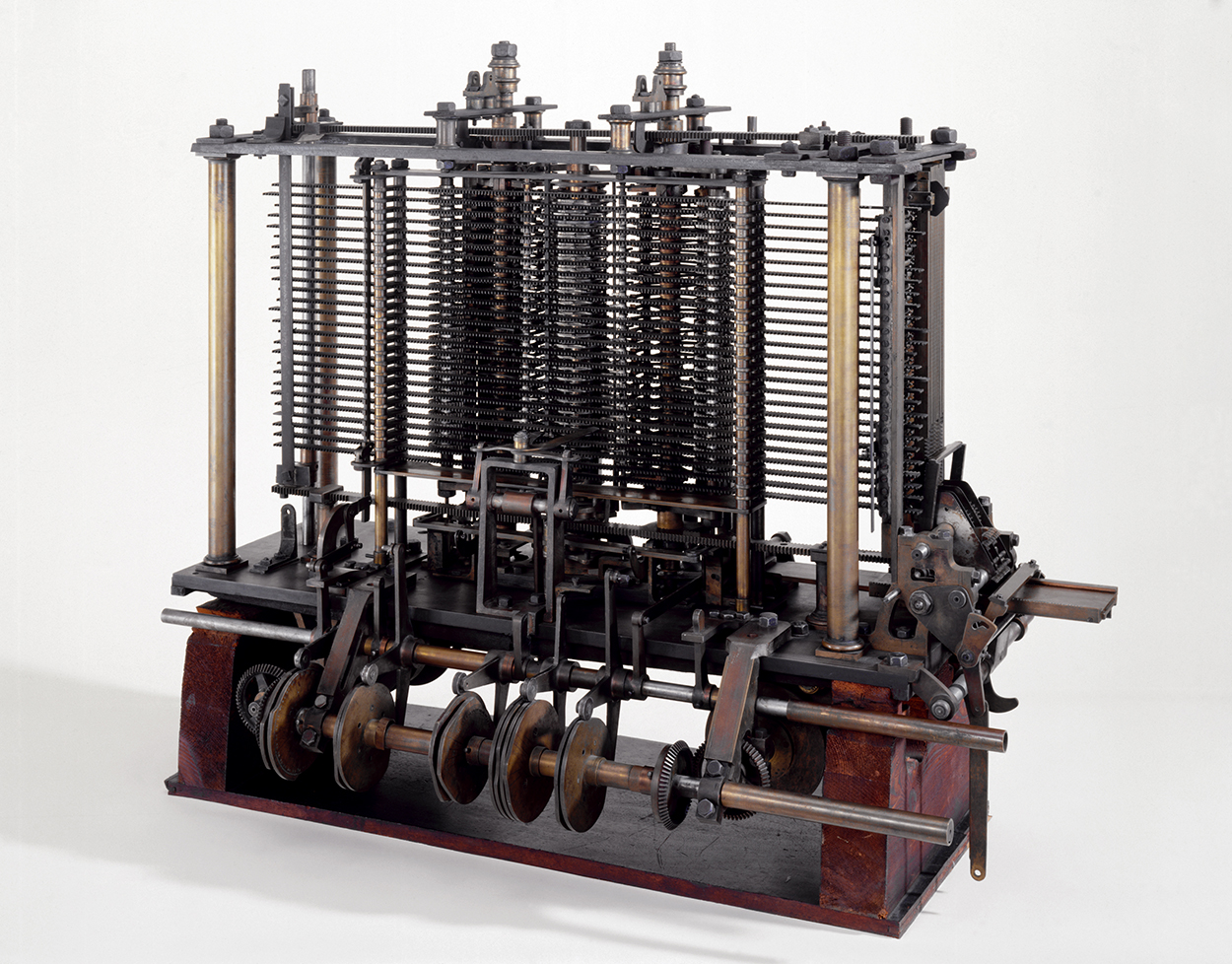

https://en.wikipedia.org/wiki/Charles_Babbage

The first true digital computer was designed by the English mathematician Charles Babbage (1792–1871).

Babbage spent most of his life and fortune trying to build his “analytical engine.”

He never got it working properly because it was purely mechanical, and the technology of his day could not produce the required wheels, gears, and cogs to the high precision that he needed.

The analytical engine did not have an operating system…

https://www.youtube.com/watch?v=FU_YFpfDqqA&t=878

https://en.wikipedia.org/wiki/Vacuum-tube_computer

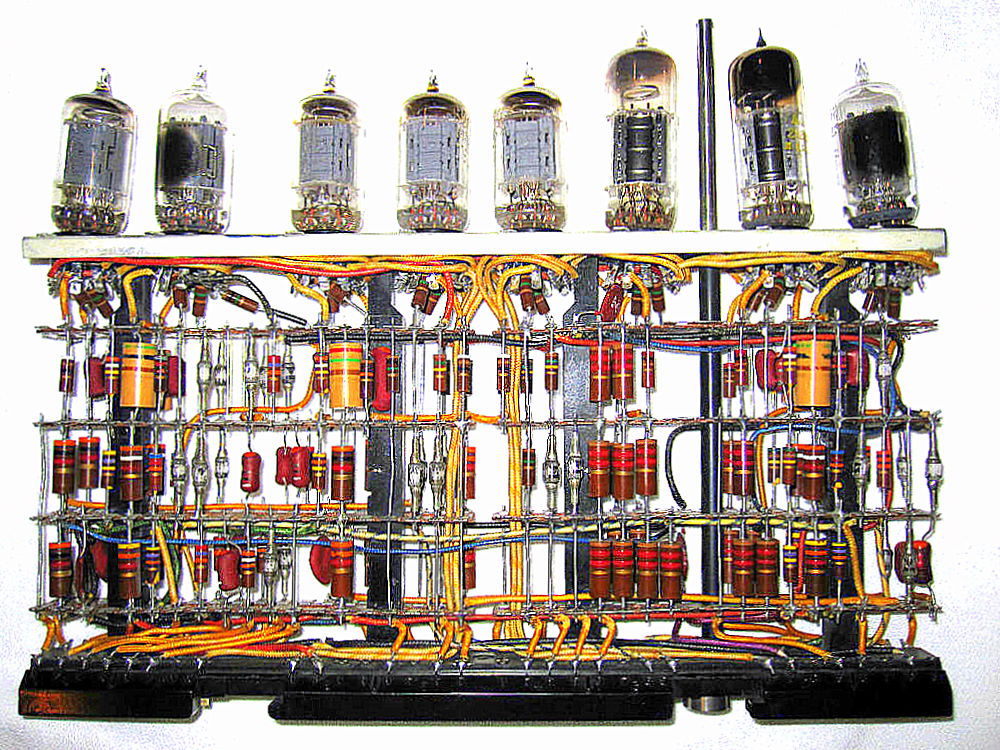

Little progress was made in constructing digital computers until World War II.

Around the mid-1940s, efforts succeeded in building calculating engines.

The first ones used mechanical relays but were very slow, with cycle times measured in seconds.

Relays were later replaced by vacuum tubes.

These machines were enormous, filling up entire rooms with tens of thousands of vacuum tubes.

A single group of people did everything: they designed, built, programmed, operated, and maintained each machine.

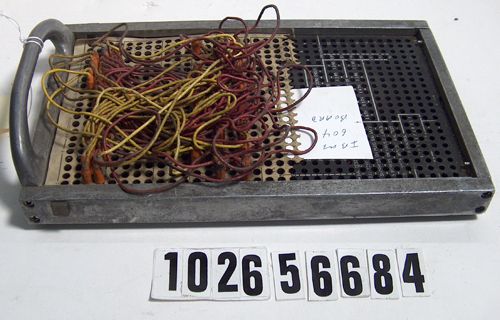

All programming was done in absolute machine language, often by wiring up plugboards to control the machine’s basic functions.

https://en.wikipedia.org/wiki/Plugboard

Moving those wires around was programming…

Programming languages were unknown (even assembly language was unknown).

Operating systems were still unheard of.

The usual mode of operation was for the programmer to sign up for a block of time on the sign-up sheet on the wall, then come down to the machine room, insert his or her plugboard into the computer, and spend the next few hours hoping that none of the 20,000 or so vacuum tubes would burn out during the run.

How to program ENIAC:

https://en.wikipedia.org/wiki/ENIAC#Programming

https://www.youtube.com/watch?v=AyxZsNq1nIA

Core rope memory (the image on Canvas):

https://www.youtube.com/watch?v=AwsInQLmjXc

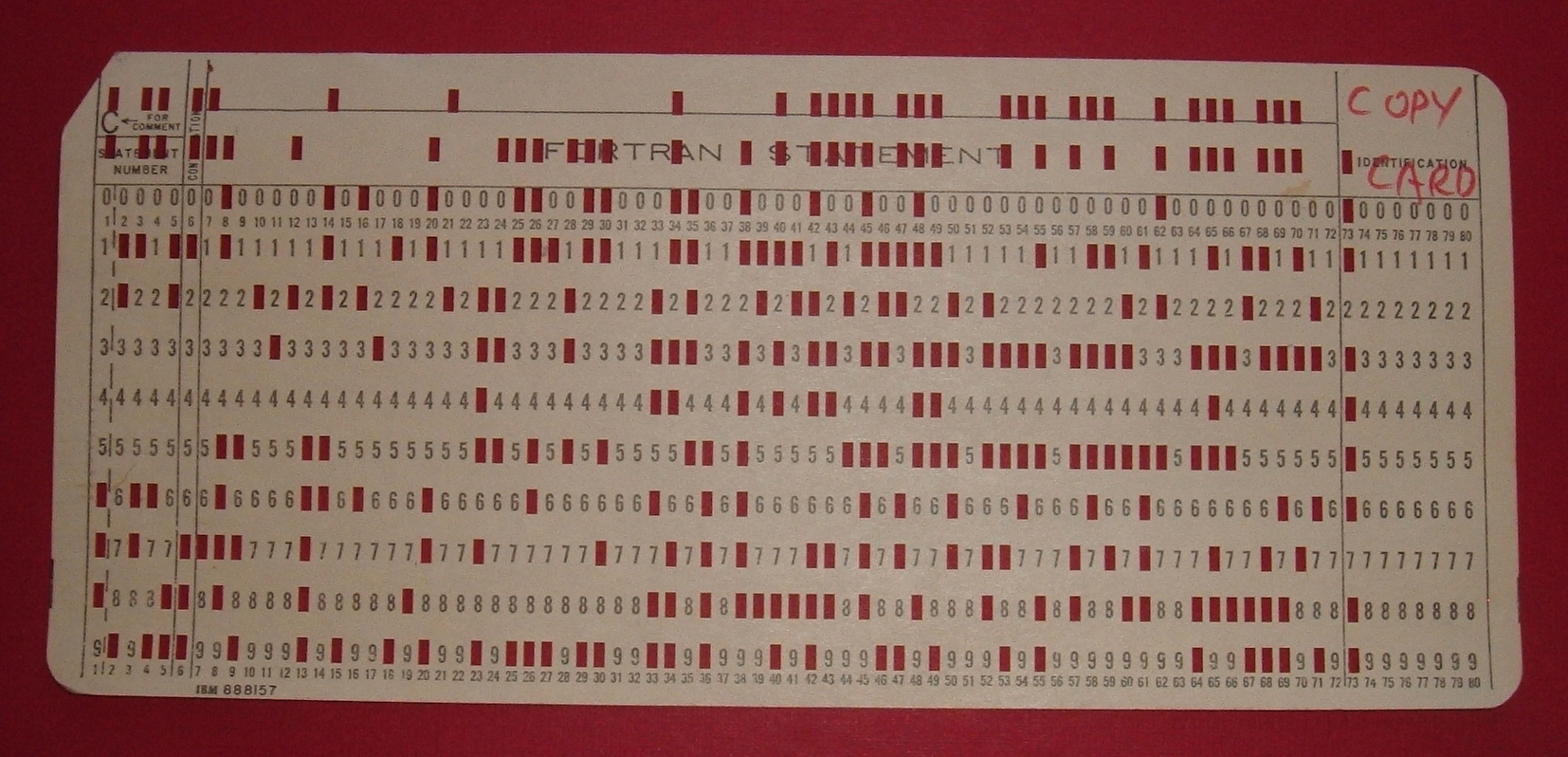

https://en.wikipedia.org/wiki/Punched_card

https://en.wikipedia.org/wiki/Punched_card_input/output

https://www.youtube.com/watch?v=KG2M4ttzBnY

At the time, most problems were numerical calculations, such as computing tables of sines, cosines, and logarithms.

By the early 1950s, it had become possible to write programs on punched cards and read them in instead of using plugboards; otherwise, the procedure was the same.

The introduction of the transistor in the mid-1950s changed the picture radically.

For the first time, there was a clear separation between designers, builders, operators, programmers, and maintenance personnel.

These machines, now called mainframes, were very expensive.

To run a job (i.e., a program or set of programs), a programmer would first write the program on paper (in FORTRAN or possibly even in assembly language), then punch it on cards.

They would then bring the card deck down to the input room, hand it to one of the operators, and wait until the output was ready.

When the computer finished whatever job it was currently running, an operator would go over to the printer, tear off the output, and carry it over to the output room so that the programmer could collect it later.

Next, the operator would take another one of the card decks that had been brought from the input room and read it in.

If another program was needed, such as the FORTRAN compiler, the operator would have to get it from a file cabinet and read it in.

Much computer time was wasted while operators were walking around the machine room.

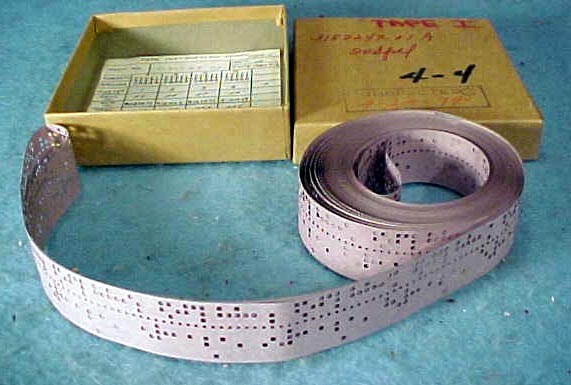

The solution generally adopted was the batch system.

The idea behind it was to collect a tray full of jobs in the input room and then read them onto a magnetic tape using a small (relatively) inexpensive computer, such as the IBM 1401.

The tape was then taken to the expensive computer that did the actual calculations, such as the IBM 7094.

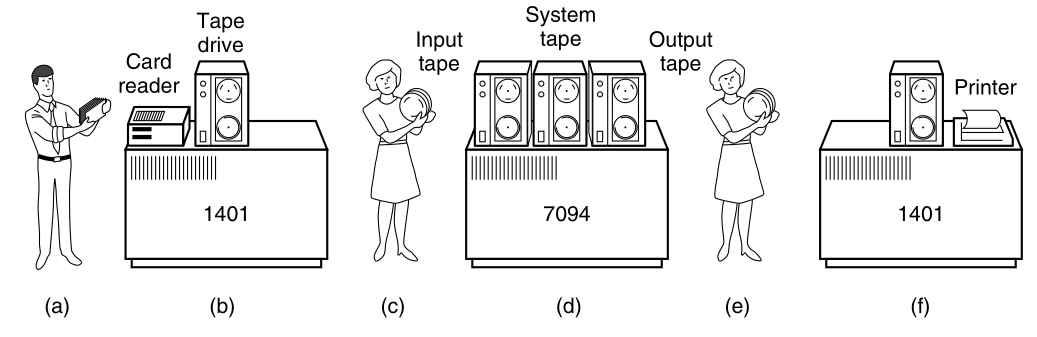

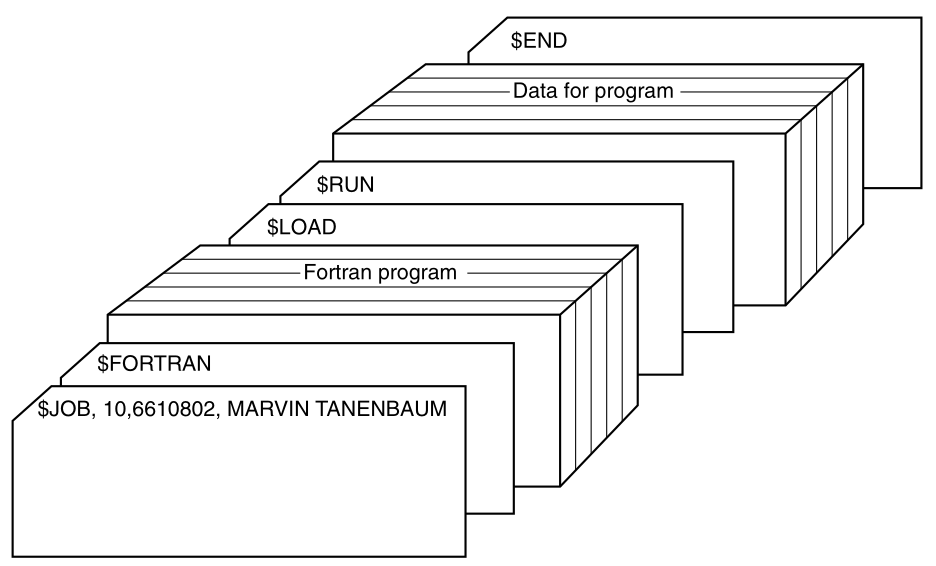

An early batch system.

(a) Programmers bring cards to 1401.

(b) 1401 reads batch of jobs onto tape.

(c) Operator carries input tape to 7094.

(d) 7094 does computing.

(e) Operator carries output tape to 1401.

(f) 1401 prints output.

After about an hour of collecting a batch of jobs, the tape was rewound and brought into the machine room, where it was mounted on a tape drive.

The operator then loaded a special program (the ancestor of today’s operating system), which read the first job from tape and ran it.

The output was written onto a second tape instead of being printed.

After each job finished, the operating system automatically read the next job from the tape and began running it.

When the whole batch was done, the operator removed the input and output tapes, replaced the input tape with the next batch, and brought the output tape to a 1401 for printing off line (i.e., not connected to the main computer).

The structure of a typical input job is shown above.

It started out with a $JOB card, specifying the maximum run time in minutes, the account number to be charged, and the programmer’s name.

Then came a $FORTRAN card, telling the operating system to load the FORTRAN compiler from the system tape.

It was followed by the program to be compiled, and then a $LOAD card, directing the operating system to load the object program just compiled.

(Compiled programs were often written on scratch tapes and had to be loaded explicitly.)

Next came the $RUN card, telling the operating system to run the program with the data following it.

Finally, the $END card marked the end of the job.

These primitive control cards were the forerunners of modern job control languages and command interpreters.
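The following Python sketch walks a card deck the way a resident batch monitor might, to make the control-card sequence concrete. Only the card names ($JOB, $FORTRAN, $LOAD, $RUN, $END) come from the description above. The `Job` record, the `run_batch` function, the sample deck, and the simulated compile/load/run steps are hypothetical. Real monitors of that era were written in assembly language and actually ran the compiler and the user program.

```python
from dataclasses import dataclass, field


@dataclass
class Job:
    header: str                                   # operands of the $JOB card
    log: list[str] = field(default_factory=list)  # what the monitor did for this job


def run_batch(deck: list[str]) -> list[Job]:
    """Process every job in the deck in order, with no operator in between."""
    finished: list[Job] = []
    job = None
    mode = None                  # which kind of ordinary card we are collecting
    source: list[str] = []
    data: list[str] = []

    for card in deck:
        if card.startswith("$JOB"):
            job = Job(header=card[len("$JOB"):].strip(" ,"))
            source, data, mode = [], [], None
        elif card == "$FORTRAN":
            job.log.append("load FORTRAN compiler from system tape")
            mode = "source"
        elif card == "$LOAD":
            job.log.append(f"compile {len(source)} source card(s); load object program")
            mode = None
        elif card == "$RUN":
            mode = "data"
        elif card == "$END":
            job.log.append(f"run program on {len(data)} data card(s)")
            finished.append(job)
            job, mode = None, None
        elif mode == "source":
            source.append(card)  # a FORTRAN statement to compile (simulated)
        elif mode == "data":
            data.append(card)    # input for the user's program (simulated)
    return finished


deck = [
    "$JOB, 10, 4711, A. PROGRAMMER",   # hypothetical run time, account, name
    "$FORTRAN",
    "      X = 2.0 * 21.0",
    "      PRINT X",
    "$LOAD",
    "$RUN",
    "42",
    "$END",
]
for job in run_batch(deck):
    print(job.header, job.log)
```

The monitor loops over the whole deck, so each job follows the previous one with no operator involvement. Removing that idle time was the point of the batch system.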

Large second-generation computers were used mostly for scientific and engineering calculations, such as solving the partial differential equations that often occur in physics and engineering.

They were largely programmed in FORTRAN and assembly language.

By the early 1960s, most computer manufacturers had two distinct, and totally incompatible, product lines.

On the one hand there were the word-oriented, large-scale scientific computers, such as the 7094, which were used for numerical calculations in science and engineering.

On the other hand, there were the character-oriented, commercial computers, such as the 1401, which were widely used for tape sorting and printing by banks and insurance companies.

IBM's answer to this split was the System/360, a single family of software-compatible machines designed to handle both scientific (i.e., numerical) and commercial computing.

The strength of the “one family” idea was also its weakness.

Its operating system, OS/360, had to run on small systems and on very large systems doing weather forecasting and other heavy computing.

It had to be good on systems with few peripherals and on systems with many peripherals.

It had to work in commercial environments and in scientific environments.

The result was an enormous and extraordinarily complex operating system, probably two to three orders of magnitude larger than previous operating systems.

It consisted of millions of lines of assembly language, written by thousands of programmers, and contained thousands upon thousands of bugs, which necessitated a continuous stream of new releases in an attempt to correct them.

Each new release fixed some bugs and introduced new ones, so the number of bugs probably remained constant over time.

Despite their enormous size and problems, OS/360 and the similar third-generation operating systems produced by other manufacturers actually satisfied most of their customers reasonably well.

They also popularized several key techniques absent in second-generation operating systems.

Multiprogramming (doing other tasks while waiting for I/O):

Previously, when the current job paused to wait for a tape or other I/O operation to complete, the CPU simply sat idle until the I/O finished.

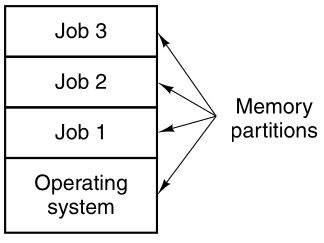

The solution that evolved was to partition memory into several pieces, with a different job in each partition.

While one job was waiting for I/O to complete, another job could be using the CPU.

If enough jobs could be held in main memory at once, the CPU could be kept busy nearly 100 percent of the time.

These computers had special hardware to protect each job against snooping and mischief by the other ones.
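A common back-of-the-envelope model shows why keeping more jobs in memory keeps the CPU busier. The model assumes that jobs wait for I/O independently of each other, which real workloads only approximate. If each job spends a fraction p of its time waiting for I/O, the CPU is idle only when all n jobs are waiting at once, so utilization is roughly 1 − p^n. The function name in this sketch is hypothetical.

```python
def cpu_utilization(io_wait_fraction: float, jobs_in_memory: int) -> float:
    """Approximate CPU utilization under the simple independent-wait model.

    The CPU is idle only when every one of the n jobs is waiting for I/O,
    which (assuming independence) happens with probability p ** n.
    """
    if not 0.0 <= io_wait_fraction <= 1.0:
        raise ValueError("io_wait_fraction must be between 0 and 1")
    if jobs_in_memory < 1:
        raise ValueError("jobs_in_memory must be at least 1")
    return 1.0 - io_wait_fraction ** jobs_in_memory


# Degree of multiprogramming vs. utilization for I/O-heavy jobs (p = 0.8).
for n in (1, 2, 4, 8):
    print(f"{n} job(s): {cpu_utilization(0.8, n):.0%} busy")
```

With p = 0.8 (jobs that wait for I/O 80% of the time), the model gives about 20% utilization with one job, about 59% with four, and about 83% with eight. Those numbers match the qualitative claim that enough jobs in memory keep the CPU nearly always busy.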

https://en.wikipedia.org/wiki/Spooling

Another major feature was the ability to read jobs from cards onto the disk as soon as they were brought to the computer room.

Then, whenever a running job finished, the operating system could automatically load a new job from the disk into the now-empty partition and run it.

This technique is called spooling (from Simultaneous Peripheral Operation On Line) and was also used for output.
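With spooling, the slow devices and the CPU no longer wait on each other. Input decks pile up on disk while the current job runs, and output piles up on disk while the printer catches up. The Python sketch below models this with two threads and two in-memory queues that stand in for the disk spool areas. The names `card_reader`, `printer`, and `run_spooled` are hypothetical.

```python
import queue
import threading


def card_reader(decks, in_spool):
    """Input side: copy each deck to the spool as soon as it arrives."""
    for deck in decks:
        in_spool.put(deck)
    in_spool.put(None)                          # sentinel: no more input


def printer(out_spool, printed):
    """Output side: drain finished output at the printer's own pace."""
    while (line := out_spool.get()) is not None:
        printed.append(line)


def run_spooled(decks):
    in_spool, out_spool = queue.Queue(), queue.Queue()   # stand-ins for disk areas
    printed = []
    reader = threading.Thread(target=card_reader, args=(decks, in_spool))
    writer = threading.Thread(target=printer, args=(out_spool, printed))
    reader.start()
    writer.start()
    # The "CPU": as soon as one job finishes, take the next one from the spool.
    while (deck := in_spool.get()) is not None:
        out_spool.put(f"output of {deck}")
    out_spool.put(None)                         # tell the printer we are done
    reader.join()
    writer.join()
    return printed


print(run_spooled(["job 1", "job 2", "job 3"]))
```

Each queue is first-in, first-out, so jobs run and print in the order their decks arrived. Meanwhile, reading, computing, and printing overlap in time.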

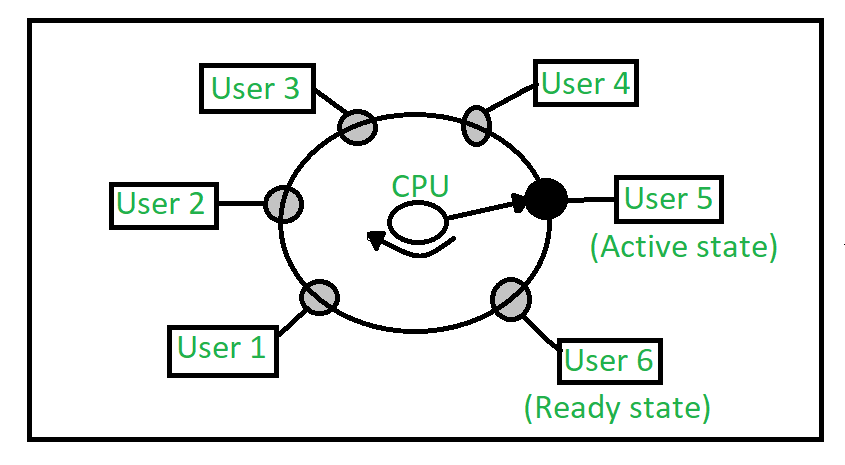

Time-sharing (fairly dividing up computing time across users):

https://en.wikipedia.org/wiki/Time-sharing

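With batch systems, the time between submitting a job and getting back the output was often several hours, and programmers wanted quicker response.

This desire for quick response time paved the way for time-sharing, a variant of multiprogramming in which each user has an online terminal.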
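Round-robin scheduling is one simple policy that captures the idea of time-sharing. It is an illustrative choice here, not necessarily what any particular early system used. A clock interrupt stops the running job after a fixed time slice (the quantum) and moves it to the back of the line, so short interactive requests are not stuck behind long computations. This is a minimal simulation; the user names and CPU times are made up.

```python
from collections import deque


def round_robin(bursts: dict[str, int], quantum: int) -> list[tuple[str, int]]:
    """Simulate time slicing; return (user, finish_time) in completion order.

    bursts maps each user to the CPU time their job still needs. After each
    quantum, a (simulated) clock interrupt preempts the running job and puts
    it at the back of the ready queue if it has not finished.
    """
    if quantum <= 0:
        raise ValueError("quantum must be positive")
    ready = deque(bursts.items())
    clock = 0
    finished = []
    while ready:
        user, remaining = ready.popleft()
        ran = min(quantum, remaining)
        clock += ran
        if remaining > ran:
            ready.append((user, remaining - ran))   # preempted: back of the line
        else:
            finished.append((user, clock))          # job done
    return finished


# alice submits a long job first; bob's short request still finishes quickly.
print(round_robin({"alice": 3, "bob": 1, "carol": 2}, quantum=1))
```

Here bob finishes at time 2. With first-come, first-served scheduling he would have waited until time 4, behind all of alice's job. On a real machine, a smaller quantum improves response time but spends more time switching between jobs.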

MIT, Bell Labs, and General Electric (then a major computer manufacturer) decided to embark on the development of a “computer utility,” a machine that would support hundreds of simultaneous time-sharing users.

Their model was the electricity distribution system: when you need electric power, you just stick a plug in the wall, and, within reason, as much power as you need will be there.

The system was known as MULTICS (MULTiplexed Information and Computing Service).

Some MULTICS installations remained in use for decades.

General Motors, Ford, and the U.S. National Security Agency, for example, only shut down their MULTICS systems in the late 1990s…

The last MULTICS running, at the Canadian Department of National Defense, shut down in October 2000.

MULTICS had a huge influence on subsequent operating systems.

It also has a still-active Web site, www.multicians.org, with a great deal of information about the system, its designers, and its users.

One of the computer scientists at Bell Labs who had worked on the MULTICS project, Ken Thompson, subsequently found a small PDP-7 minicomputer that no one was using and set out to write a stripped-down, one-user version of MULTICS.

This work later developed into the UNIX operating system, which became popular in the academic world, with government agencies, and with many companies.

See this UNIX historical background:

https://www.youtube.com/watch?v=tc4ROCJYbm0

https://pdos.csail.mit.edu/6.828/2023/readings/ritchie78unix.pdf

https://www.read.seas.harvard.edu/~kohler/class/aosref/ritchie84evolution.pdf

Because the source code was widely available, various organizations developed their own (incompatible) versions, which led to chaos.

Two major versions developed: System V, from AT&T, and BSD (Berkeley Software Distribution), from the University of California at Berkeley.

These had minor variants as well, now including FreeBSD, OpenBSD, and NetBSD.

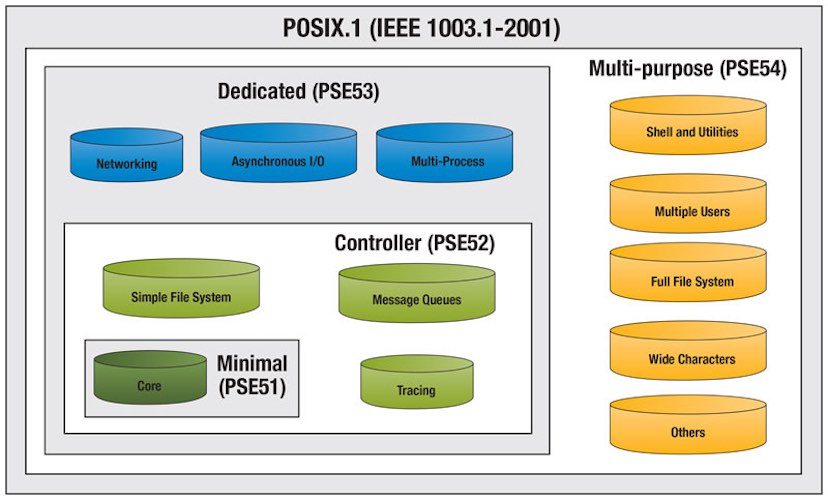

To make it possible to write programs that could run on any UNIX system, the IEEE developed a standard for UNIX, called POSIX, that most versions of UNIX now support.

POSIX defines a minimal system call interface that conformant UNIX systems must support:

https://unix.org/

https://en.wikipedia.org/wiki/Unix

https://en.wikipedia.org/wiki/POSIX

https://en.wikipedia.org/wiki/History_of_Unix

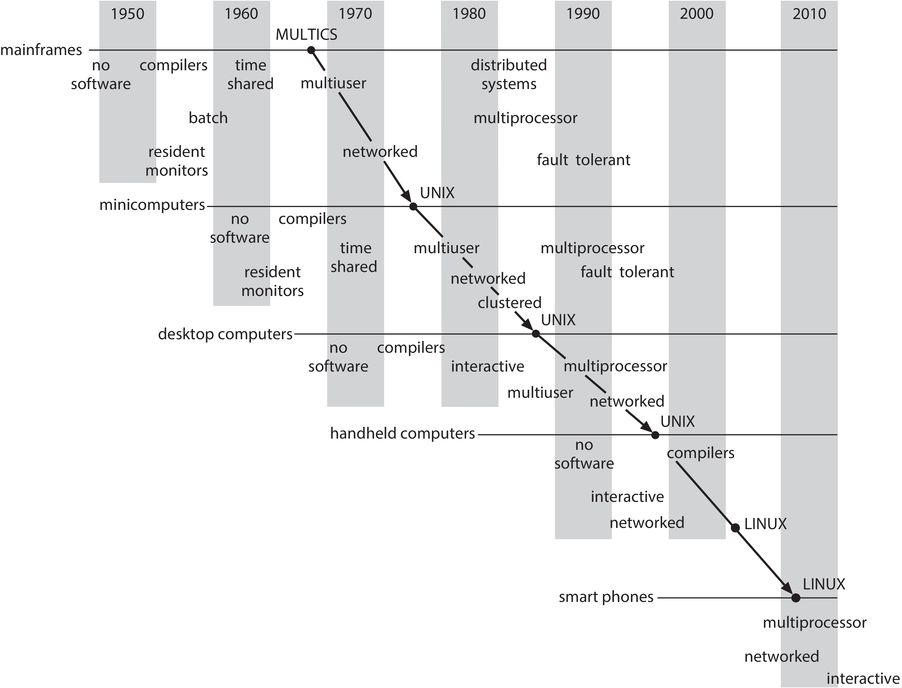
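POSIX fixes the interface rather than the implementation, so a program that sticks to calls such as fork, exec, and waitpid should behave the same on any conformant system. Here is a minimal sketch of the classic UNIX way to run a command. It uses Python's `os` module, whose `os.fork`, `os.execvp`, and `os.waitpid` mirror the POSIX calls of the same names, so it runs only on POSIX systems. The helper name `run_command` is hypothetical.

```python
import os


def run_command(argv: list[str]) -> int:
    """fork + exec + wait: run argv as a child process and return its exit status."""
    pid = os.fork()                      # fork(): clone the calling process
    if pid == 0:                         # in the child
        try:
            os.execvp(argv[0], argv)     # exec: replace the child with the program
        finally:
            os._exit(127)                # reached only if exec failed (shell convention)
    _, status = os.waitpid(pid, 0)       # in the parent: block until the child exits
    if os.WIFEXITED(status):
        return os.WEXITSTATUS(status)
    return -1                            # child was killed by a signal


if __name__ == "__main__":
    print(run_command(["echo", "hello from a child process"]))
```

Splitting process creation (fork) from program loading (exec) lets the child adjust its own environment, for example redirecting files, before the new program starts. That is how UNIX shells implement I/O redirection.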

Show the image here.

With the development of LSI (Large Scale Integration) circuits, chips containing thousands of transistors on a square centimeter of silicon, the age of the microprocessor-based personal computer dawned.

https://en.wikipedia.org/wiki/DOS

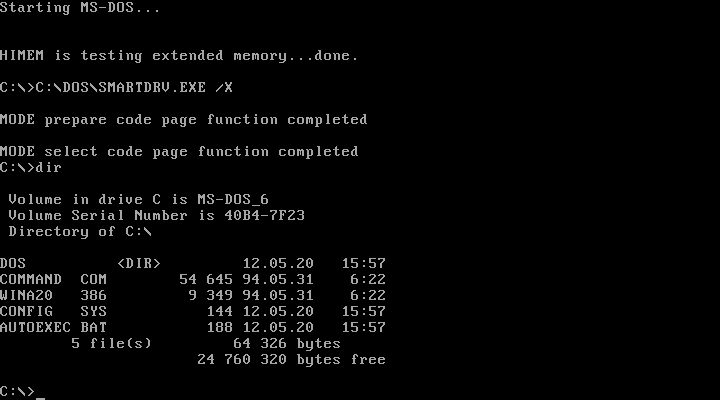

Microsoft bought an operating system called DOS (Disk Operating System), originally developed by another company, Seattle Computer Products, and offered IBM a package that included it along with Microsoft’s BASIC.

Microsoft also hired the original author of DOS to improve it.

The revised system was renamed MS-DOS (MicroSoft Disk Operating System) and quickly came to dominate the IBM PC market.

https://en.wikipedia.org/wiki/History_of_the_graphical_user_interface

The big players in graphical user interfaces were Apple, then Microsoft Windows, and the X Window System (on UNIX and Linux).

CP/M, MS-DOS, and the Apple DOS were all command-line systems:

users typed commands at the keyboard.

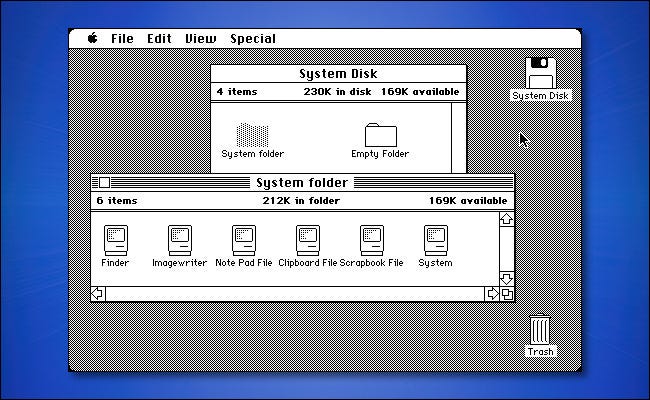

Years earlier, Doug Engelbart at Stanford Research Institute had invented the GUI (Graphical User Interface), pronounced “gooey,” complete with windows, icons, menus, and mouse.

Apple’s Steve Jobs saw the possibility of a truly user-friendly personal computer for users who knew nothing about computers and did not want to learn (not much has changed, lol), and the Apple Macintosh was announced in early 1984.

In 2001, Apple made a major operating system change, releasing Mac OS X, with a new version of the Macintosh GUI, on top of Berkeley UNIX.

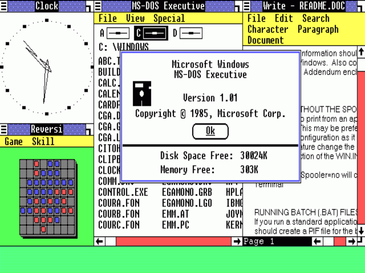

To compete with the Macintosh, Microsoft invented Windows.

Originally Windows was just a graphical environment on top of 16-bit

MS-DOS

(i.e., it was more like a shell than a true operating system).

However, current versions of Windows are descendants of Windows

NT,

a full 32-bit system, rewritten from scratch.

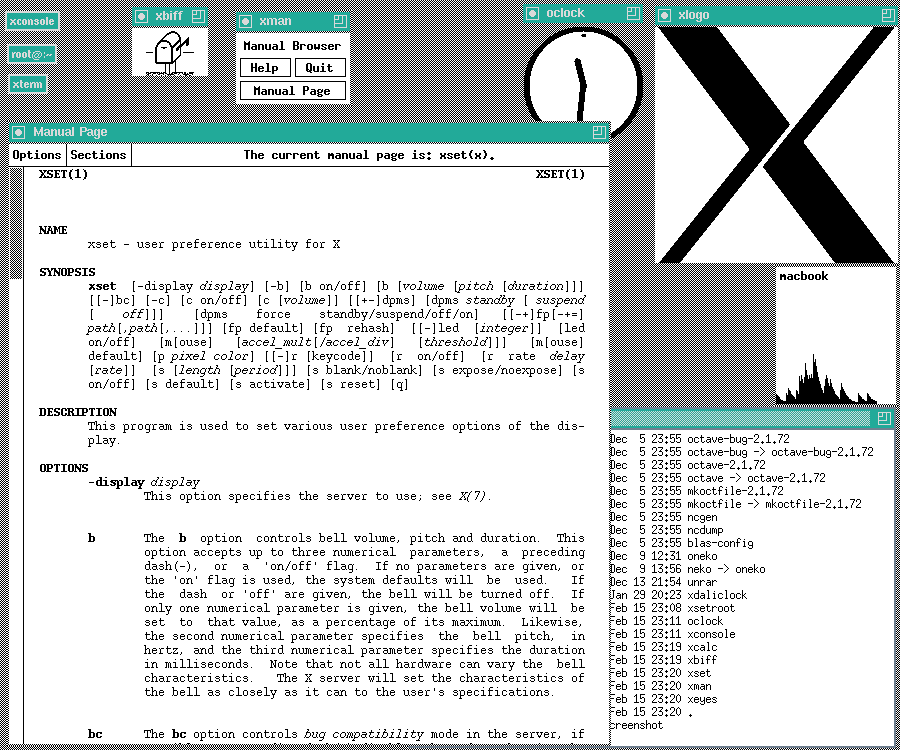

The other major contender in the personal computer world is

UNIX

(and its various derivatives).

Although many UNIX users, especially experienced programmers,

prefer a command-based interface to a GUI,

nearly all UNIX systems support a windowing system,

called the X Window system developed at M.I.T.

This system handles the basic window management,

allowing users to create, delete, move, and resize windows using a

mouse.

When UNIX was young (Version 6), the source code was widely available, under AT&T license, and frequently studied.

John Lions, of the University of New South Wales in Australia, even wrote a little booklet describing its operation, line by line (Lions, 1996).

http://www.lemis.com/grog/Documentation/Lions/ (commentary)

https://minnie.tuhs.org/cgi-bin/utree.pl?file=V6 (UNIX V6 source code)

https://pdos.csail.mit.edu/6.828/2005/readings/pdp11-40.pdf (architecture supplement)

https://pdos.csail.mit.edu/6.828/2005/pdp11/ (architecture supplement)

This booklet was used (with permission of AT&T) as a text in many university operating system courses.

When AT&T released Version 7, it dimly began to realize that UNIX was a valuable commercial product, so it issued Version 7 with a restrictive license that prohibited the source code from being studied in courses, in order to avoid endangering its status as a trade secret…

Many universities complied by simply dropping the study of UNIX and teaching only theory.

Teaching only theory leaves the student with a lopsided view.

Theoretical topics usually covered deeply in courses and books on operating systems, such as scheduling algorithms, are in practice not really that important.

Subjects that really are important, such as I/O and file systems, are generally neglected because there is little theory about them.

Then, Andrew Tanenbaum decided to write a new operating system from scratch that would be compatible with UNIX from the user’s point of view.

The name MINIX stands for mini-UNIX, because it is small enough that even a non-guru can understand how it works.

In addition to eliminating the legal problems, MINIX had another advantage over UNIX: it was written a decade after UNIX and was structured in a more modular way.

The file system and the memory manager were not part of the kernel but ran as user programs.

In the current release (MINIX3), this modularization has been extended to the I/O device drivers, which (with the exception of the clock driver) all run as user programs.

The design of MINIX3 was inspired by the following observations:

Operating systems are becoming bloated, slow, and unreliable.

They crash far more often than other electronic devices, such as televisions, cell phones, and DVD players, and have so many features and options that practically nobody can understand them fully or manage them well.

Computer viruses, worms, spyware, spam, and other forms of malware have become epidemic.

To a large extent, many of these problems are caused by a fundamental design flaw: current operating systems lack modularity.

The entire operating system is typically millions of lines of C/C++ code, compiled into a single massive executable program run in kernel mode.

A bug in any one of those millions of lines of code can cause the system to malfunction.

Getting all this code correct is impossible, especially when about 70% of it consists of device drivers written by third parties and outside the purview of the people maintaining the operating system.

Another difference is that UNIX was designed to be efficient; MINIX was designed to be readable (inasmuch as one can speak of any program hundreds of pages long as being readable).

The MINIX code, for example, has thousands of comments in it.

MINIX was originally designed for compatibility with Version 7 (V7) UNIX.

Version 7 was used as the model because of its simplicity and elegance.

It is sometimes said that Version 7 was an improvement not only over all its predecessors but also over all its successors.

Most “modern” books on operating systems are strong on theory and weak on practice, or they are largely superficial and high-level, defining terms to memorize that correspond to basic concepts.

The MINIX book aims to provide a better balance between the two.

It covers all the fundamental principles in great detail, including processes, inter-process communication, semaphores, monitors, message passing, scheduling algorithms, input/output, deadlocks, device drivers, memory management, paging algorithms, file system design, security, and protection mechanisms.

But it also discusses one particular system, MINIX3.

This arrangement allows the reader not only to learn the principles but also to see how they are applied in a real operating system.

When the first edition of the MINIX book appeared in 1987, it caused something of a small revolution in the way operating systems courses were taught.

Until then, most courses had regressed to merely covering theory.

With the appearance of MINIX, many schools began to have laboratory courses in which students examined a real operating system to see how it worked inside.

Regrettably, in 2023, most OS courses are still too theory-heavy.

With the advent of POSIX, MINIX began evolving toward the new standard while maintaining backward compatibility with existing programs.

The version of MINIX described in the MINIX book, MINIX3, is based on the POSIX standard.

Like UNIX, MINIX was written in the C programming language and was intended to be easy to port to various computers.

MINIX3 was a major redesign of the system that greatly restructured the kernel, reduced its size, and emphasized modularity and reliability.

The new version was intended both for PCs and embedded systems, where compactness, modularity, and reliability are crucial.

The MINIX3 kernel is well under 4000 lines of executable code, compared to millions of executable lines of code for Windows, Linux, FreeBSD, and other operating systems.

Small kernel size is important because kernel bugs are far more devastating than bugs in user-mode programs, and more code means more bugs.

Device drivers were moved out of the kernel in MINIX3;

they can do less damage in user mode.

Most of the comments about the MINIX3 system calls (as opposed to comments about the actual code), however, also apply to other UNIX systems.

This should be kept in mind when reading the text.

Apart from the small kernel, the rest of the system, including all the device drivers (except the clock driver), is a collection of small, modular, user-mode processes, each of which is tightly restricted in what it can do and with which other processes it may communicate.
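The idea of user-mode servers that may talk only to approved partners can be modeled in a few lines. The Python sketch below is a toy, not MINIX3 code; the real kernel is written in C and passes fixed-size messages between processes. The `Kernel` class, its `register` and `send` methods, the server names, and the inode-to-block mapping are all hypothetical. In this model the kernel's only job is to deliver a message and return the reply, and it refuses any message on a channel that the sender's allow-list does not include.

```python
class Kernel:
    """Toy message-passing kernel with a per-process IPC allow-list."""

    def __init__(self):
        self.handlers = {}   # process name -> function(message) -> reply
        self.allowed = {}    # process name -> set of names it may send to

    def register(self, name, handler, may_send_to=()):
        self.handlers[name] = handler
        self.allowed[name] = set(may_send_to)

    def send(self, src, dst, message):
        """Deliver message from src to dst and return dst's reply."""
        if dst not in self.allowed.get(src, set()):
            raise PermissionError(f"{src} may not send to {dst}")
        return self.handlers[dst](message)


kernel = Kernel()
# Hypothetical disk driver: answers block requests, sends to no one.
kernel.register("disk", lambda msg: f"<contents of block {msg['block']}>")
# Hypothetical file server: may talk only to the disk driver.
kernel.register("fs",
                lambda msg: kernel.send("fs", "disk", {"block": msg["inode"] + 100}),
                may_send_to={"disk"})
# An ordinary user process: may talk only to the file server.
kernel.register("user", lambda msg: None, may_send_to={"fs"})

print(kernel.send("user", "fs", {"inode": 7}))   # user -> fs -> disk
try:
    kernel.send("user", "disk", {"block": 0})      # bypassing the file server
except PermissionError as err:
    print("refused:", err)
```

In this model a buggy server can still return wrong data, but it cannot reach processes outside its allow-list. That containment is the reason drivers can do less damage in user mode.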

https://en.wikipedia.org/wiki/History_of_Linux

Shortly after MINIX was released, a USENET newsgroup, comp.os.minix, was formed to discuss it.

Within weeks, it had 40,000 subscribers, most of whom wanted to add vast numbers of new features to MINIX to make it bigger and better (well, at least bigger).

Every day, several hundred of them offered suggestions, ideas, and frequently snippets of source code.

The author of MINIX was able to successfully resist this onslaught for several years, in order to keep MINIX clean enough for students to understand and small enough that it could run on computers that students could afford.

For people who thought little of MS-DOS, the existence of MINIX (with source code) as an alternative was even a reason to finally go out and buy a PC.

One of these people was a Finnish student named Linus Torvalds.

Torvalds installed MINIX on his new PC and studied the source code carefully.

Torvalds wanted to read USENET newsgroups (such as comp.os.minix) on his own PC rather than at his university. Some features he needed were lacking in MINIX, so he wrote a program to do that, but he soon discovered he needed a different terminal driver, so he wrote that too.

Then he wanted to download and save postings, so he wrote a disk driver and then a file system.

By August 1991, he had produced a primitive kernel.

On Aug. 25, 1991, he announced it on comp.os.minix.

This announcement attracted other people to help him, and on March 13, 1994, Linux 1.0 was released.

Torvalds is still the benevolent dictator for life of Linux…

https://en.wikipedia.org/wiki/Benevolent_dictator_for_life

https://en.wikipedia.org/wiki/List_of_Linux_distributions

Show this!

https://eylenburg.github.io/os_familytree.htm

https://github.com/torvalds/linux

Interestingly, MINIX may actually be the world’s most installed operating system.

It is the basis of the Intel Management Engine…

Linux is the basis of Android.

The Mach microkernel, with UNIX running on top, is the basis of macOS.

To review:

OS: No OS

All programming was done by experts in machine language, without any support from an OS or any other system software.

Transistors replaced vacuum tubes as smaller and faster switches.

OS: Batch OS

Programs were submitted in batches of punched cards.

The role of the OS was to automate the compilation, loading, and execution of programs.

Multiprogramming was developed, which allows the OS to schedule the execution of jobs to make more efficient use of the CPU and other resources.

Integrated circuits allowed microchips to replace individual transistors.

OS: Interactive multi-user OS.

Interrupts were developed to allow the OS to enforce time-sharing and to interact with keyboards and display terminals.

Increases in the capacity and speed of memory and secondary storage devices imposed additional management tasks on the OS.

Large-scale integration (LSI) allowed the placement of a complete microprocessor on a single chip, leading to the development of personal computers (PCs).

OS: Desktop and laptop OS.

The OS was responsible for all operations, from initial booting to multitasking, scheduling, interaction with various peripheral devices, and keeping all information safe.

The emphasis was on user-friendliness, including the introduction of the GUI.

Hardware enabled the harnessing of the power of multiple computers.

OS: OSs for supercomputers, distributed systems, and mobile devices.

The ability to create extremely powerful chips spawned several directions of development.

Supercomputers combined large numbers of processors and made the OS and other software responsible for exploiting the increased computation power through parallel processing.

Computer networks gave rise to the Internet, which imposed requirements for privacy and security, along with efficient communication.

Wireless networks led to the development of hand-held devices, with additional demands on the OS.

Courses:

https://pdos.csail.mit.edu/6.828/

https://ocw.mit.edu/courses/6-828-operating-system-engineering-fall-2012/

00-History/book-riscv-rev3.pdf

https://pdos.csail.mit.edu/6.828/2023/xv6.html

https://en.wikipedia.org/wiki/Xv6

Rust implementations of xv6:

https://github.com/dancrossnyc/rxv64

https://github.com/Ko-oK-OS/xv6-rust

https://github.com/Jaic1/xv6-riscv-rust

https://github.com/o8vm/octox

https://projectoberon.net/

https://en.wikipedia.org/wiki/Oberon_(operating_system)

Book and code are available from Wirth’s site:

https://people.inf.ethz.ch/wirth/ProjectOberon/index.html

There is quite comprehensive documentation on Wikibooks:

https://en.wikibooks.org/wiki/Oberon

You can run it in a browser:

https://schierlm.github.io/OberonEmulator/

You can run it on Linux:

http://oberon.wikidot.com/

https://en.wikipedia.org/wiki/Not_Another_Completely_Heuristic_Operating_System

https://homes.cs.washington.edu/~tom/nachos/

https://xinu.cs.purdue.edu/

https://en.wikipedia.org/wiki/Xinu

https://en.wikipedia.org/wiki/Plan_9_from_Bell_Labs

00-History/plan9_intro.pdf

A kernel written from scratch in Rust, with inspiration from MINIX, Plan 9, seL4, etc.:

https://en.wikipedia.org/wiki/Redox_(operating_system)

https://www.redox-os.org/

https://github.com/llenotre/maestro

https://blog.lenot.re/a/introduction

https://github.com/asterinas/asterinas

https://www.cs.bham.ac.uk/~exr/lectures/opsys/10_11/lectures/os-dev.pdf

https://samypesse.gitbook.io/how-to-create-an-operating-system

https://github.com/SamyPesse/How-to-Make-a-Computer-Operating-System

How to develop an OS yourself:

https://wiki.osdev.org/Main_Page

Not theory, but practical development itself.

https://littleosbook.github.io/