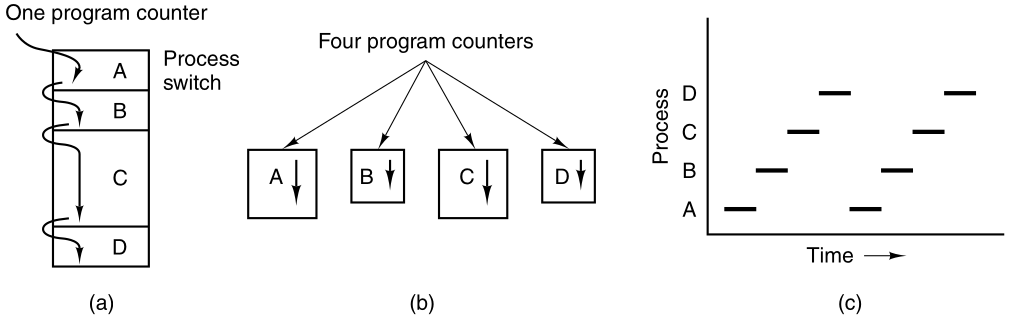

(a) We see a computer multiprogramming four programs in memory.

The most central concept in any operating system is the

process:

an abstraction of a running program.

Everything else hinges on this concept.

Multi-tasking

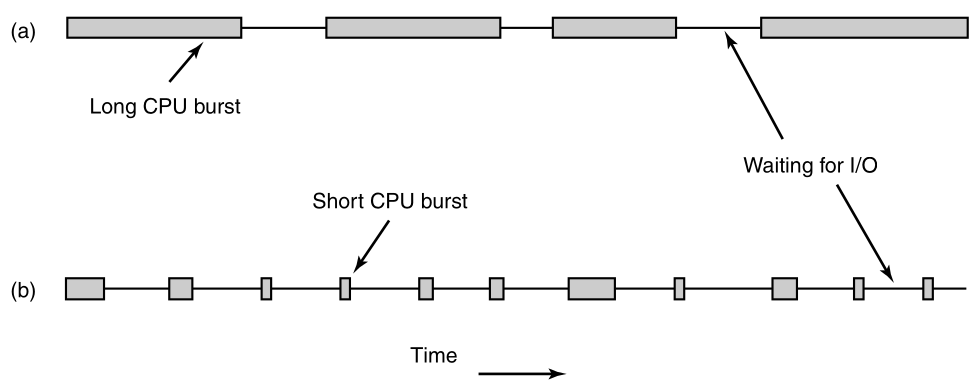

All modern computers can do several things at the same time.

While running a user program,

a computer can also be reading from a disk,

and outputting text to a screen, etc.

The CPU also switches from program to program,

running each for tens or hundreds of milliseconds.

Pseudo-parallelism

While, strictly speaking, at any instant of time,

the CPU is running only one program,

in the course of 1 second, it may work on several programs,

thus giving the users the illusion of parallelism.

Sometimes people speak of pseudo-parallelism in this context,

to contrast it with the true hardware parallelism of multiprocessor

systems

(which have two or more CPUs sharing the same physical memory).

Keeping track of multiple, parallel activities is hard for people to

do.

Operating system designers designed an evolving conceptual model,

(sequential processes) that makes parallelism easier to deal with.

All the runnable software on the computer,

sometimes including the operating system,

is organized into a number of sequential processes.

A process is just an executing program, including:

current values of the program counter register, other registers, and

variables.

https://en.wikipedia.org/wiki/Computer_multitasking

Conceptually, each process has its own virtual CPU.

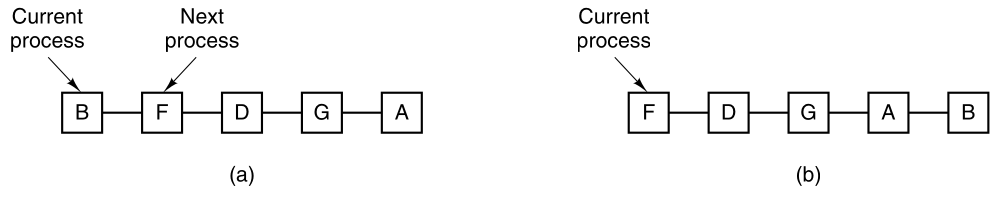

The real CPU switches back and forth from process to process.

It is much easier to think about a collection of processes running in

(pseudo) parallel,

than to try to keep track of how the CPU switches from program to

program.

This rapid switching back and forth is called multiprogramming.

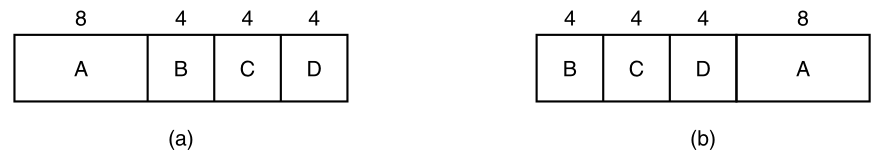

(a) We see a computer multiprogramming four programs in memory.

Conceptual model of four independent, sequential processes.

We see four processes, each with its own flow of control

(i.e., its own program counter register),

and each one running independently of the other ones.

Of course, there is only one physical program counter register,

so when each process runs,

its logical program counter is loaded into the real program counter

register.

When it is finished for the time being,

the physical program counter register is saved in the process’ logical

program counter in memory.

Only one program is active at any instant.

We see that viewed over a long enough time interval,

all the processes have made progress,

but at any given instant only one process is actually running.

With the CPU switching back and forth among the processes,

the rate at which a process performs its computation will not be

uniform,

and probably not even reproducible, if the same processes are run

again.

Processes must not be programmed with built-in assumptions about

timing.

Consider an I/O process that starts a tape to restore backed up

files,

executes an idle loop 10,000 times to let it get up to speed,

and then issues a command to read the first record.

If the CPU decides to switch to another process during the idle

loop,

then the tape process might not run again,

until after the first record was already past the read head.

When a process has critical real-time requirements,

that is, particular events must occur within a specified number of

milliseconds,

special measures must be taken to ensure that they do occur.

Normally, however, most processes are not affected by the underlying

multiprogramming of the CPU,

or the relative speeds of different processes.

The difference between a process and a program is subtle, but

crucial.

A process is an activity of some kind.

It has a program, input, output, and a state.

A single processor may be shared among several processes,

with some scheduling algorithm being used,

to determine when to stop work on one process and service a different

one.

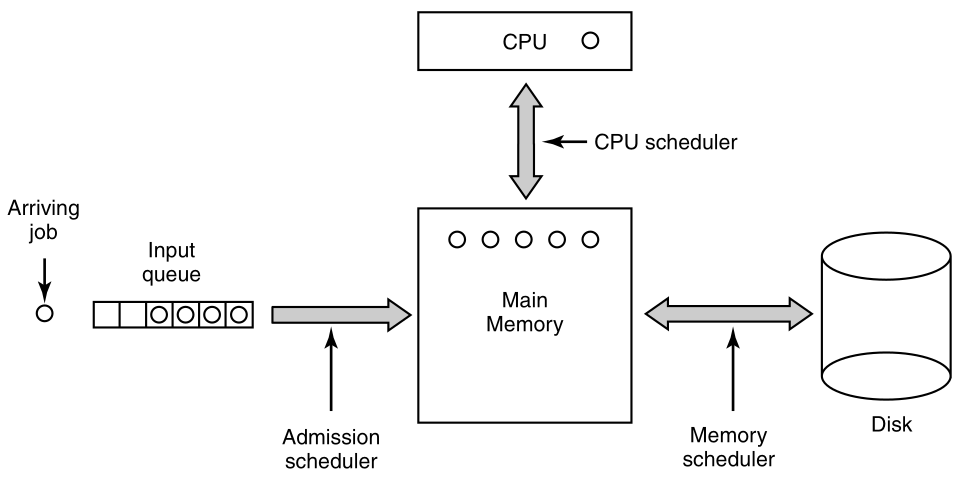

Operating systems need some way to make sure all the necessary processes exist.

Simple embedded systems

In very simple systems, or in systems designed for running only a single

application

(e.g., controlling a device in real time),

it may be possible to have all the processes that will ever be

needed,

be present when the system comes up.

General purpose systems

Some way is needed to create and terminate processes,

as needed during operation.

There are four principal events that cause processes to be

created:

1) System initialization.

2) Execution of a process creation system call by a running

process.

3) A user request to create a new process.

4) Initiation of a batch job.

When an operating system is booted,

often several processes are created.

Some of these are foreground processes, that is,

processes that interact with (human) users and perform work for

them.

Others are background processes,

which are not associated with particular users,

but instead have some specific function.

For example, web server:

A background process may be designed to accept incoming requests,

for web pages hosted on that machine,

waking up when a request arrives to service the request.

Processes that stay in the background to handle some activity,

such as web pages, printing, and so on are called daemons.

Large systems commonly have dozens of them.

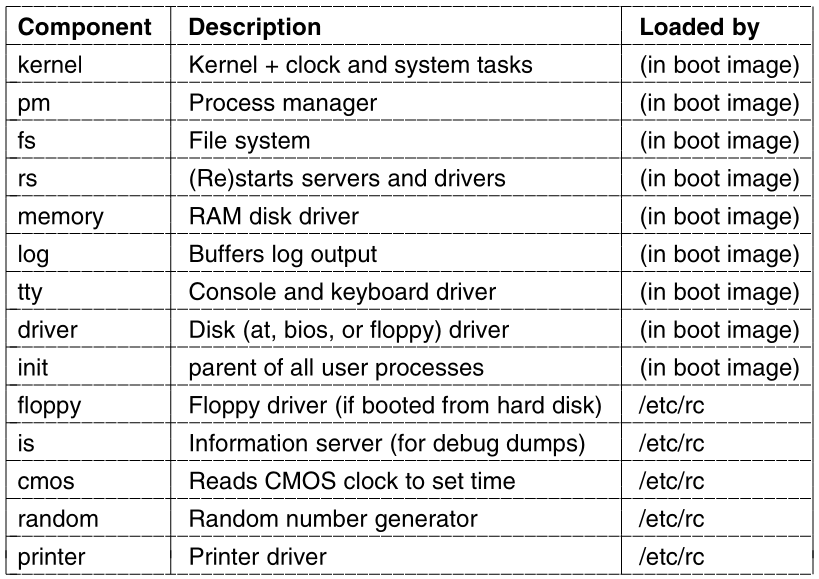

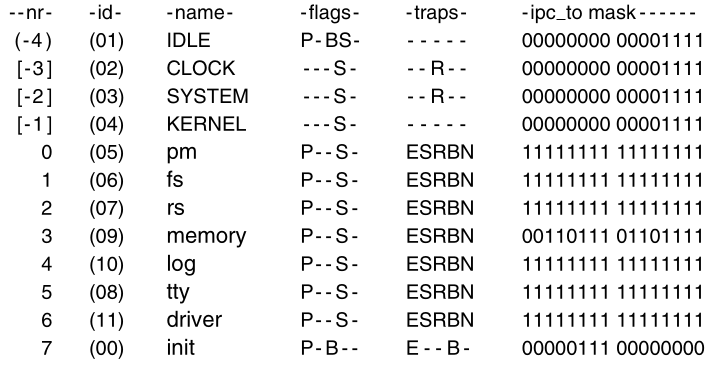

In MINIX3, the ps program can be used to list the running

processes:

ps

In addition to the processes created at boot time,

new processes can be created afterward as well.

Often a running process will issue system calls,

to create one or more new processes to help it do its job.

Creating new processes is particularly useful under the condition

that,

the work to be done can easily be formulated in terms of several

related,

but otherwise independent, interacting processes.

Compiler example

For example, when compiling a large program,

the make program invokes the C compiler,

to convert source files to object code,

and then it invokes the install program,

to copy the program to its destination,

set ownership and permissions, etc.

In MINIX3, the C compiler itself is actually several different programs,

which work together.

These include a pre-processor, a C language parser,

an assembly language code generator, an assembler, and a linker.

In interactive systems, users can start a program by typing a

command.

Virtual consoles allow a user to start a program,

say a compiler, and then switch to an alternate console, and start

another program,

perhaps to edit documentation while the compiler is running.

MINIX3 supports four virtual terminals.

You can switch between them using ALT+F1 through

ALT+F4.

The last situation in which processes are created,

applies only to the batch systems found on large mainframes /

HPCs.

Here users can submit batch jobs to the system (possibly

remotely).

When the operating system decides that it has the resources to run

another job,

it creates a new process, and in it, runs the next job from the input

queue.

Technically, in all these cases, a new process is created,

by having an existing process execute a process creation system

call.

That process may be:

a running user process,

a system process invoked from the keyboard or mouse, or

a batch manager process.

What that process does is execute a system call to create the new

process.

This system call tells the operating system to create a new

process,

and indicates, directly or indirectly, which program to run in it.

In MINIX3, there is only one system call to create a new

process:

fork

This call creates an exact clone of the calling process.

After the fork, the two processes, the parent and the child,

have the same memory image, the same environment strings, and the same

open files.

That is all there is.

Usually, the child process then executes execve or a

similar system call,

to change its memory image and run a new program.

For example, when a user types a command to the shell, for

example:

sort

the shell forks off a child process,

and the child executes sort.

Why fork, then execute?

The two-step process allows the child to:

manipulate its file descriptors after the fork, but before the

execve,

to accomplish redirection of standard input, standard output, and

standard error.

Memory is mostly separate between parent and child

processes.

In both MINIX3 and UNIX, after a process is created,

both the parent and child have their own distinct address spaces.

If either process changes a word in its address space,

the change is not visible to the other process.

The child’s initial address space is a copy of the parent’s,

but there are two distinct address spaces involved;

no writable memory is shared

Like some UNIX implementations,

MINIX3 can share the program text between the two,

since that cannot be modified.

A newly created process can share some of its creator’s other resources,

such as open files.

After a process has been created,

it starts running and does whatever its job is.

A process usually terminates due to one of the following

conditions:

1) Normal exit (voluntary).

2) Error exit (voluntary).

3) Fatal error (involuntary).

4) Killed by another process (involuntary).

Most processes terminate because they have done their work.

When a compiler has compiled the program given to it,

the compiler executes a system call to tell the operating system that it

is finished.

This system call is exit in MINIX3.

Screen-oriented programs also support voluntary termination.

For example, editors have a key combination the user can invoke,

to tell the process to save the working file,

remove any temporary files that are open, and terminate.

An error caused by the process, perhaps due to a program bug.

Examples include:

executing an illegal instruction, referencing nonexistent memory, or

dividing by zero.

In MINIX3, a process can tell the operating system that it wishes to

handle certain errors itself,

in which case the process is signaled (interrupted) instead of

terminated,

when one of the errors occurs.

A process discovers a fatal error.

For example, if a user types the command:

cc foo.c

to compile the program foo.c and no such file exists,

the compiler simply exits.

One process can execute a system call telling the OS to kill another

process.

In MINIX3, this call is:

kill

Of course, the killer must have the necessary authorization to kill the

killee.

Inherited death?

In some systems, when a process terminates, either voluntarily or

otherwise,

all processes it created are immediately killed as well.

MINIX3 does not work this way, however.

In some systems, when a process creates another process,

the parent and child continue to be associated in certain ways.

The child can itself create more processes, forming a process

hierarchy.

A process has only one parent (but zero, one, two, or more

children).

Signaling process groups:

In MINIX3, a process, its children, and further descendants,

together may form a process group.

When a user sends a signal from the keyboard,

the signal may be delivered to all members of the process group,

currently associated with the keyboard

(usually all processes that were created in the current window).

This is signal-dependent.

If a signal is sent to a group, each process can:

catch the signal,

ignore the signal, or

take the default action (to be killed by the signal).

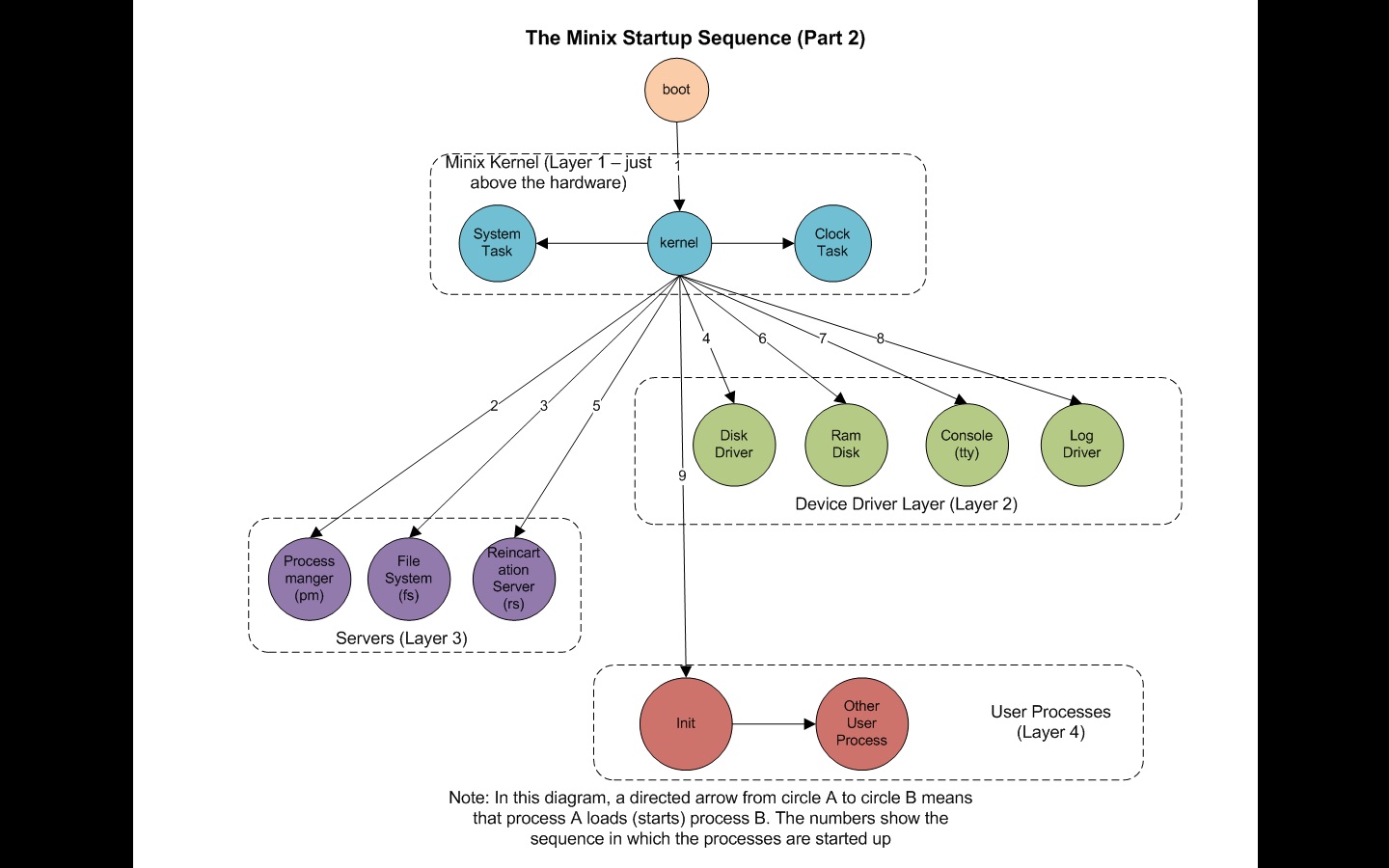

As a simple example of how process trees are used,

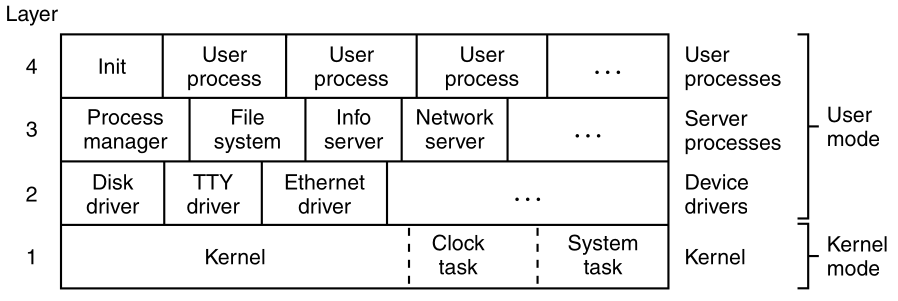

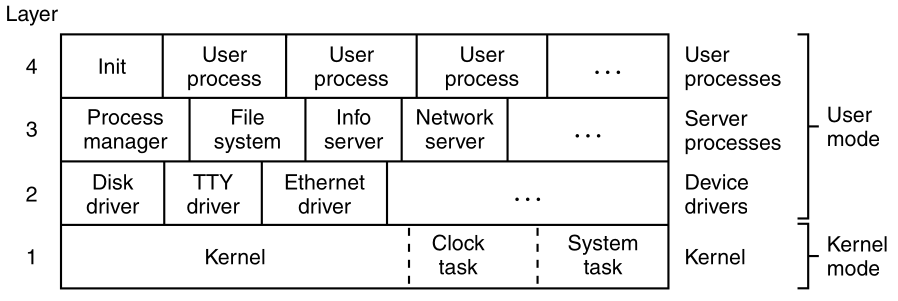

let us look at how MINIX3 initializes itself.

Two special programs, the reincarnation server, and

init, are present in the boot image.

Reincarnation server

The reincarnation server’s job is to (re)start drivers and

servers.

It begins by blocking, waiting for a message telling it what to

create.

Init

In contrast, init executes the /etc/rc

script,

that causes it to issue commands to the reincarnation server,

to start the drivers and servers not present in the boot image.

Next, init manages all the terminals.

It reads a configuration file /etc/ttytab,

to see which terminals and virtual terminals exist.

init forks a getty process for each one,

displays a login prompt on it,

and then waits for input.

For each terminal,

when a username is typed,

getty execs a login process with the username as its

argument.

If the user succeeds in logging in,

then login will exec the user’s shell.

So the shell is a child of init.

User commands create children of the shell,

which are grandchildren of init.

Parent-driven init enables restarting failed

processes:

This procedure makes sure the drivers and servers are started as

children of the reincarnation server,

so if any of them ever terminate,

the reincarnation server will be informed and can restart (i.e.,

reincarnate) them again.

This allows MINIX3 to tolerate a driver or server crash,

because a new one will be started automatically.

+++++++++++++++++ Cahoot-02-1

Each process has it’s own data, including:

program counter register, general purpose registers, stack, open files,

alarms, and other internal state,

Data needs to be moved between processes.

Processes often need to interact, communicate, and synchronize with

other processes.

One process may generate some output,

that another process should use as input.

Example: grep may be ready before cat is done.

In the shell command

cat chapter1 chapter2 chapter3 | grep tree

the first process, running cat, concatenates and outputs

three files.

The second process, running grep,

selects all lines containing the word tree.

Depending on the relative speeds of the two processes

(which depends on both the relative complexity of the programs,

and how much CPU time each one has had),

it may happen that grep is ready to run,

but there is no input waiting for it.

It must then block until some input is available.

When a process blocks, it does so because logically it cannot

continue,

typically because it is waiting for input that is not yet available.

It is also possible for a process that is conceptually ready and able

to run,

to be stopped because the operating system has decided to allocate the

CPU to another process for a while.

These two conditions are completely different.

In the first case, the waiting (blocking) is inherent in the

problem

(you cannot process the user’s command line until it has been

typed).

In the second case, it is a technicality of the scheduling

system.

There are not enough CPUs to give each process its own private

processor.

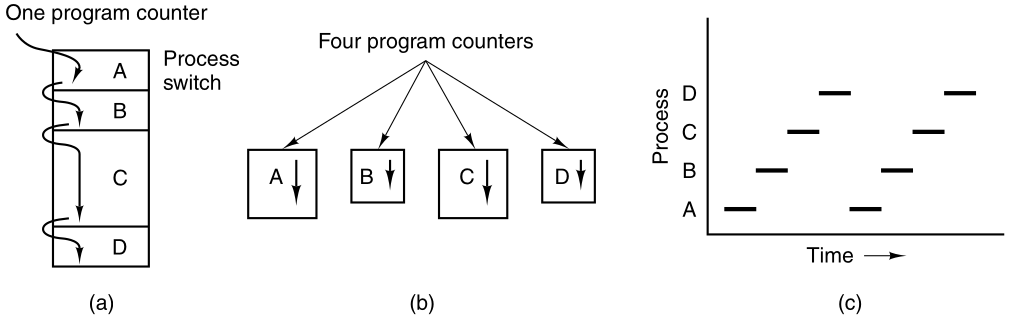

Processes transition between three states:

1) Running (actually using the CPU at that instant).

2) Ready (runnable; temporarily stopped to let another process

run).

3) Blocked (unable to run until some external event happens).

Running versus ready:

The first two states are similar.

In both running and ready states, the process is willing to run.

In ready, there is temporarily no CPU available for it.

Blocked:

The blocked state is different from the first two.

The process cannot run because it is waiting on something,

even if the CPU has nothing else to do.

A process can be in running, blocked, or ready state.

Transitions between these states are as shown.

Four transitions are possible among these three states, as shown.

A running process discovers that it cannot continue.

In MINIX3,

when a process reads from a pipe or special file

(e.g., a terminal) and there is no input available,

the process is automatically moved from the running state to the blocked

state.

In some systems, a process must execute a system call,

block or pause to get into blocked state.

are caused by the process scheduler,

a part of the operating system,

without the process even knowing about them.

Transition 2: Running to ready

occurs when the scheduler decides that the running process has run long

enough,

and it is time to let another process have some CPU time.

Transition 3: Ready to running

occurs when all the other processes have had their fair share,

and it is time for the first process to get the CPU to run again.

Scheduling

decide which process should run when and for how long.

Many algorithms have been devised,

to try to balance the competing demands of efficiency for the system as

a whole,

and fairness to individual processes.

occurs when the external event for which a process was waiting

(e.g., the arrival of some input) happens.

If no other process is running then,

transition 3 will be triggered immediately,

and the process will start running.

Otherwise it may have to wait in ready state for a little while,

until the CPU is available.

Some of the processes run programs that carry out commands typed in

by a user.

Other processes are part of the system,

and handle tasks such as carrying out requests for file services,

or managing the details of running a disk or a tape drive.

Example: disk access

When a disk interrupt occurs,

the system may make a decision to stop running the current

process,

and run the disk process,

which was blocked waiting for that interrupt.

We say “may” because it depends upon relative priorities,

of the running process and the disk driver process.

Instead of thinking about interrupts,

we can think about user processes, disk processes, terminal processes,

and so on,

which block when they are waiting for something to happen.

When the disk block has been read, or the character typed,

the process waiting for it is unblocked,

and is eligible to run again.

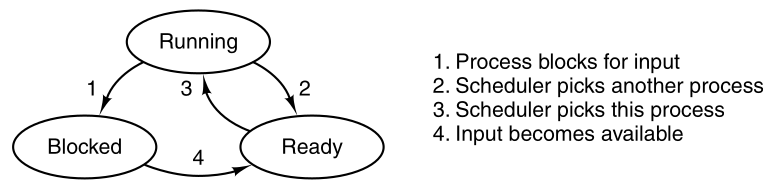

The scheduler is at the lowest level of abstraction of the OS,

with a variety of abstracted processes on top of it.

All the interrupt handling, and details of actually starting and

stopping processes,

are hidden away in the scheduler, which is actually quite small.

The rest of the operating system is nicely structured in process

form.

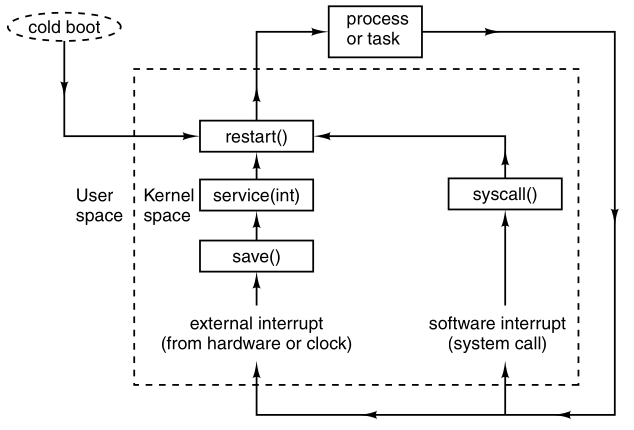

The lowest layer of a process-structured operating system handles

interrupts and scheduling.

Above that layer, sequential processes exist.

The “scheduler” is not the only thing in the lowest abstraction

layer,

there is also support for interrupt handling and inter-process

communication.

++++++++++++ Cahoot-02-2

When the process is switched from running to ready state,

it can be restarted later, as if it had never been stopped.

However, it’s resources are stored in central locations (registers,

etc.).

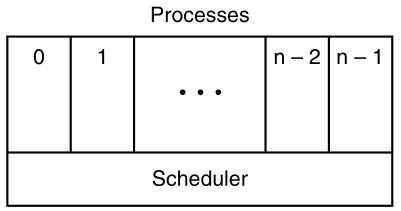

To implement the process,

the operating system maintains a process table,

with one entry per process.

Some authors call these entries process control blocks.

Each entry in the table includes everything about the process that

must be saved, including:

its program counter registers, general purpose registers, stack pointer,

memory allocation, the status of its open files, its accounting and

scheduling information, alarms, and other signals.

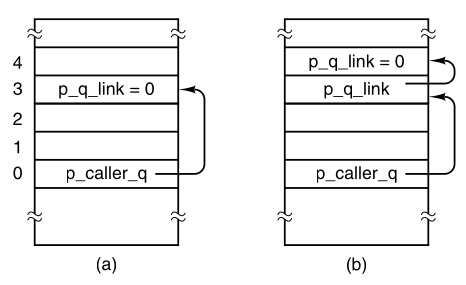

In MINIX3, inter-process communication, memory management, and file

management,

are each handled by separate modules within the system,

so the process table is partitioned,

with each module maintaining the fields that it needs.

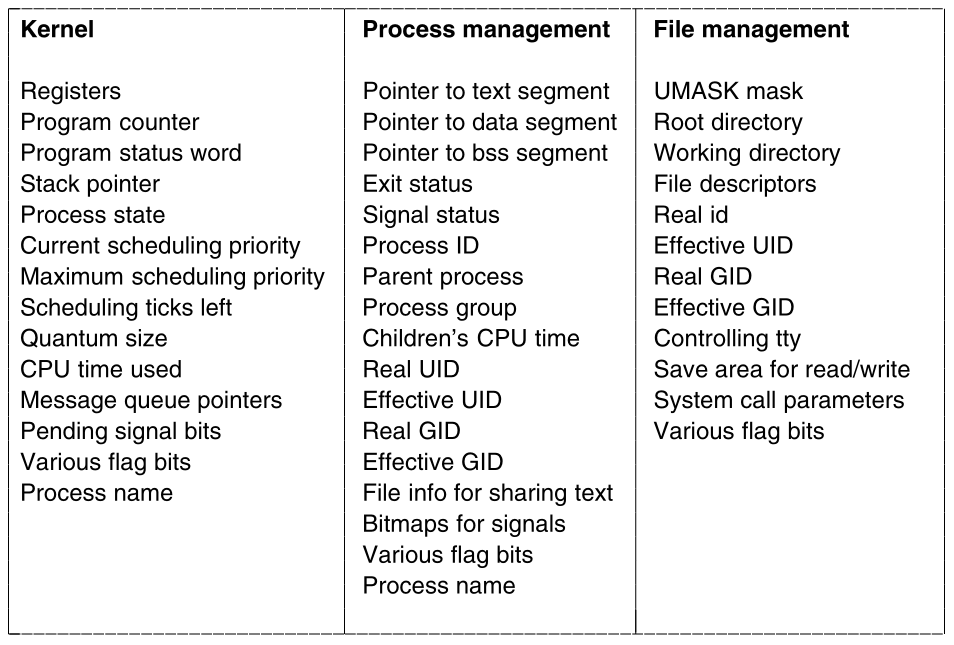

The image below shows some important fields in the process

table.

The fields in the first column are the only ones relevant to this

section.

The 2nd two columns illustrate information is needed elsewhere in the

system:

Demonstrate:

Show the actual process table in running Minix3.

The illusion of multiple sequential processes is maintained,

on a machine with one CPU and many I/O devices.

Now we describe the “scheduler” works in MINIX3,

but most modern operating systems work essentially the same way.

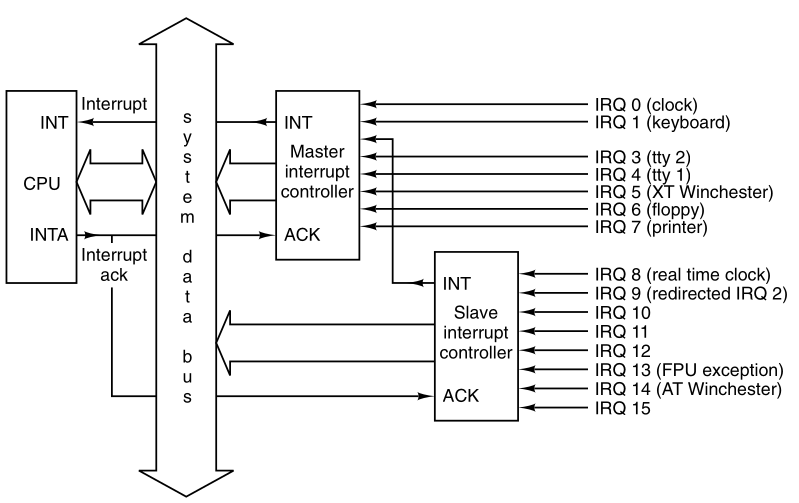

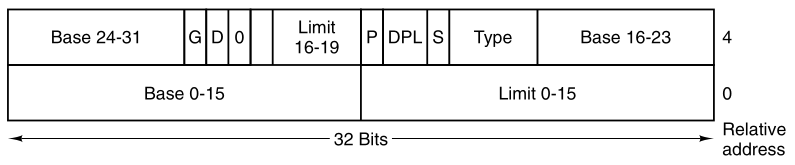

Associated with each I/O device class

(e.g., floppy disks, hard disks, timers, terminals)

is a data structure in a table called the interrupt descriptor

table.

The most important part of each entry in this table is called the

interrupt vector.

It contains the address of the interrupt service procedure.

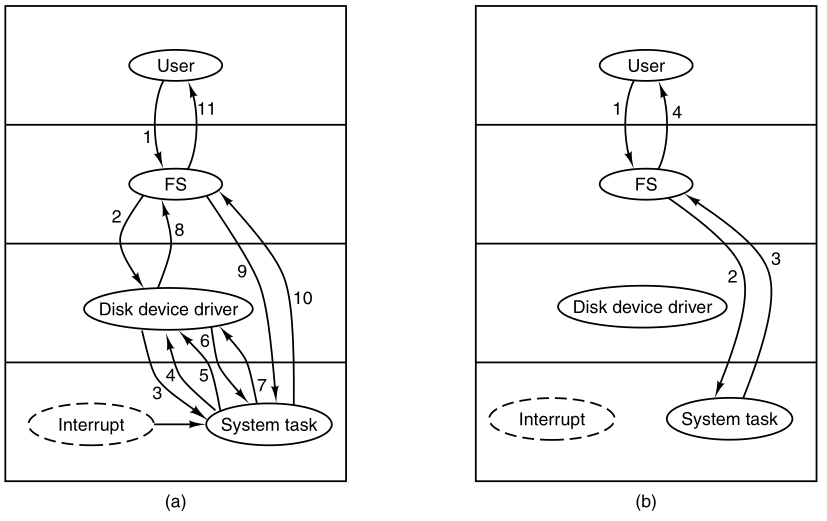

A user process transitions from running to

ready:

Suppose that a “user process” is in running state.

Another process, a “disk process” needs to access a disk.

Thus, a disk interrupt occurs from a “disk process”,

which is now in blocked state.

Interrupt hardware pushes registers to stack:

The program counter, program status word, and possibly one or more

registers,

are all pushed onto the (current) stack by the interrupt hardware.

On the stack, they may now be used by the interrupt service

procedure.

Interrupt service procedure stores “user process”

data:

The computer then jumps to the address specified in the disk interrupt

vector.

The interrupt service procedure saves all the registers,

in the process table entry for the current process.

The current process number and a pointer to its entry are kept,

in global variables so they can be found quickly.

Actions such as saving the registers and setting the stack

pointer,

cannot even be expressed in high-level languages such as C,

so those action are taken by a small assembly language routine.

Interrupt service procedure clears space for “disk

process”:

Then, the information deposited by the interrupt is removed from the

stack,

and the stack pointer is set to a temporary stack used by the process

handler.

Perform interrupt job:

When this data transition routine is finished,

it calls a C procedure to do the rest of the actual work,

for this specific interrupt type.

Message the “disk process” that interrupted the

CPU:

inter-process communication in MINIX3 is via messages.

The disk process is blocked waiting for a message.

Thus, the next step is to build a message to be sent to the disk

process.

The message says that an interrupt occurred,

to distinguish it from messages from user processes,

requesting disk blocks to be read, and things like that.

“Disk process” is now in ready state:

The state of the disk process is now changed from blocked to

ready,

and the scheduler is called.

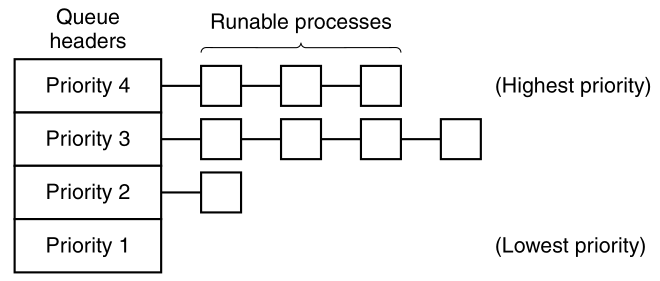

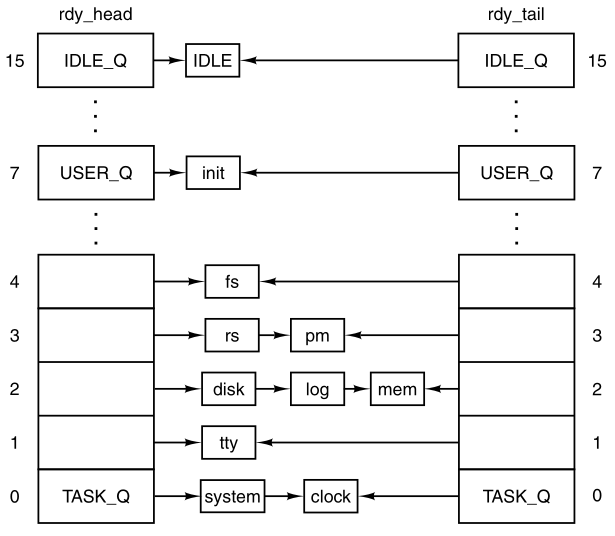

In MINIX3, different processes have different priorities,

to give better service to I/O device handlers than to user processes,

for example.

Schedule “user process” or “disk process:

If the disk process is now the highest priority runnable process,

it will be scheduled to run.

If the process that was interrupted is just as important, or more

so,

then it will be scheduled to run again,

and the disk process will have to wait a little while.

Data for current process copied back to central

storage:

Either way, the C procedure called by the assembly language interrupt

code now returns,

and the assembly language code loads up both the registers and memory

map,

for the now-current process, and starts it running.

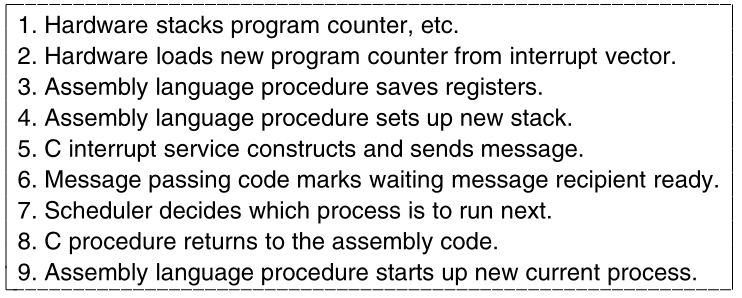

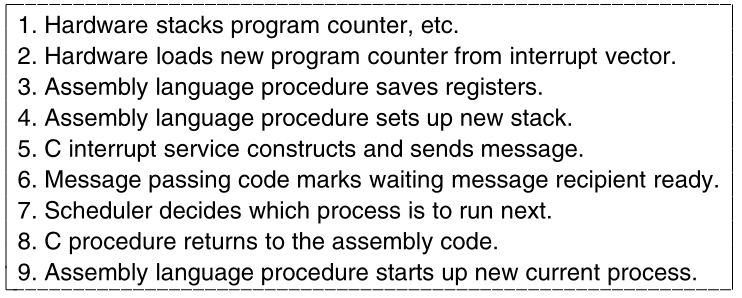

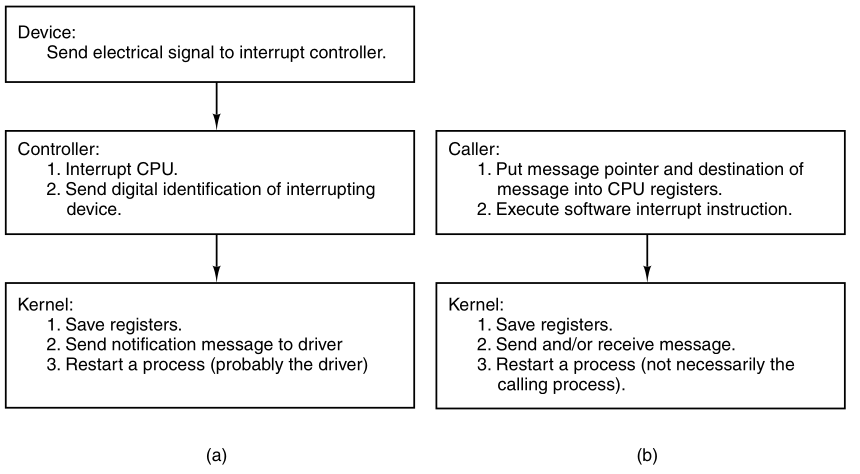

Interrupt handling and scheduling are summarized in the image

below.

This is what lowest level of the operating system does when an interrupt

occurs.

The details may vary slightly from system to system.

In traditional operating systems,

each process has an address space, and a single thread of control.

In fact, that is almost the definition of a process.

Sometimes we have multiple threads of control in the same address

space,

running in quasi-parallel,

as though they were separate processes

(except for the shared address space).

These threads of control are usually just called threads,

although some people call them lightweight processes.

A process can group related resources together.

A process has an address space containing:

program text, data, and other resources.

These resources may include open files, child processes,

pending alarms, signal handlers, accounting information, and more.

The other concept a process has is a thread of execution,

usually shortened to just thread.

Threads have their own register data and

stack:

The thread has a program counter register,

that keeps track of which instruction to execute next.

It is also known as the Instruction Pointer Register (RIP) (on

x86).

It also has other registers, which hold its current working

variables.

It has a stack, which contains the execution history,

with one frame for each procedure called but not yet returned from.

Although a thread must execute in some process,

the thread and its process are different concepts,

and can be treated separately.

Processes are used to group resources together.

Threads are the entities scheduled for execution on the CPU.

What threads add to the process model,

is to allow multiple executions to take place in the same process

environment,

to a large degree independent of one another.

This makes sharing data between threads easier and more efficient.

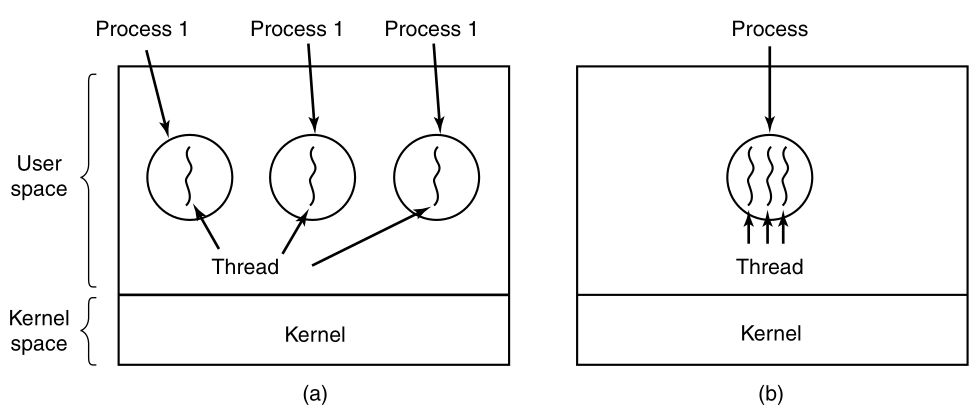

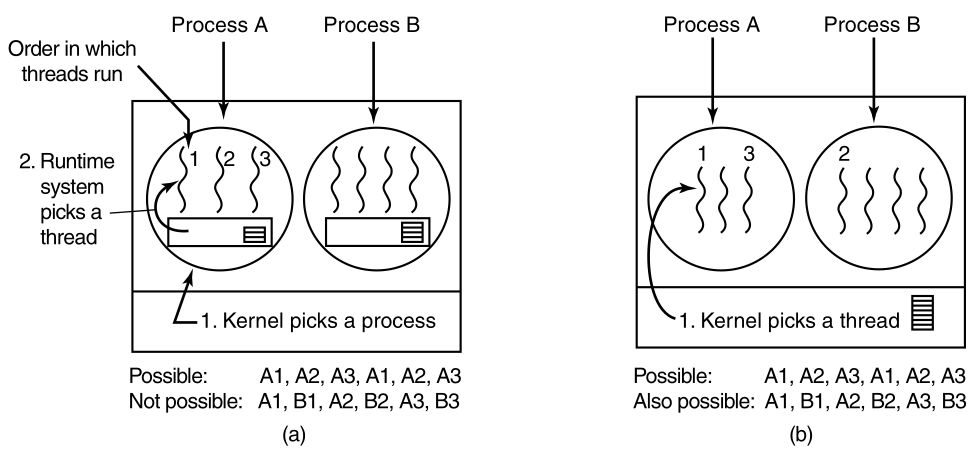

Traditional process versus multi-thread process:

(a) Three traditional processes each with one thread.

Each process has its own address space, and a single thread of

control.

(b) One single process, with three threads of control.

Although in both cases we have three threads,

in (a) each of them operates in a different address space,

whereas in (b) all three of them share the same address space.

In (b) the stacks will be sequentially organized in that address

space.

As an example of where multiple threads might be used,

consider a web browser process.

Many web pages contain multiple small images.

For each image on a web page,

the browser must set up a separate connection to the page’s home

site,

and request the image.

A great deal of time is spent establishing and releasing all these

connections.

By having multiple threads within the browser,

many images can be requested at the same time,

speeding up performance in most cases since with small images,

the set-up time is the limiting factor,

not the speed of the transmission line.

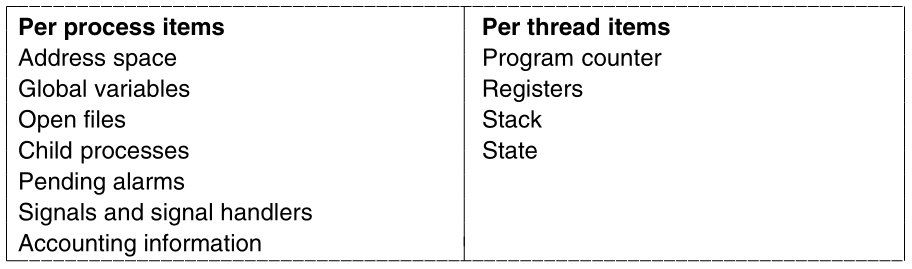

When multiple threads are present in the same address space,

a few of the fields of the process table we showed above,

are not actually per process,

but per thread, so a separate thread table is needed,

with one entry per thread.

Per-thread data:

Among the per-thread items are the:

program counter register (e.g., RIP), registers, and state.

The program counter is needed because threads,

like processes, can be suspended and resumed.

The registers are needed,

because when threads are suspended,

their registers must be saved.

Thread states:

Finally, threads, like processes, can be in:

running, ready, or blocked state.

The image below lists some per-process and per-thread items:

The first column lists some items shared by all threads in a

process.

The second one lists some items private to each thread.

+++++++++++++++++++ Cahoot-02-3

The OS can be in kernel or user space.

In some systems, the kernel is not aware of the threads.

They are managed entirely in user space.

When a thread is about to block,

it chooses and starts its successor, before stopping.

Several user-level threads packages were in common use,

including the POSIX P-threads and Mach C-threads packages.

Some kernels are aware of multiple threads per process,

so when a thread blocks, the kernel chooses the next one to run,

either from the same process or a different one.

To do scheduling, the kernel must have a thread table,

that lists all the threads in the system,

analogous to the process table.

Although these two alternatives may seem equivalent,

they differ considerably in performance.

Switching threads is much faster when thread management is done in

user space,

rather than when a system call is needed.

This fact argues strongly for doing thread management in user space.

On the other hand, when threads are managed entirely in user

space,

and one thread blocks

(e.g., waiting for I/O, or a page fault to be handled),

then the kernel blocks the entire process,

since it is not even aware that other threads exist.

This fact as well as others argue for doing thread management in the

kernel.

As a consequence, both systems are in use,

and various hybrid schemes have been proposed as well.

Whether threads are managed by the kernel or in user space,

they introduce problems that must be solved,

and which change the programming model appreciably.

Consider the effects of the fork system call.

If the parent process has multiple threads,

should the child also have them?

If not, the process may not function properly,

since all of them may be essential.

However, if the child process gets as many threads as the parent,

what happens if a thread was blocked on a read call,

for example, from the keyboard?

Are two threads now blocked on the keyboard?

When a line is typed, do both threads get a copy of it?

Only the parent?

Only the child?

The same problem exists with open network connections.

Another class of problems is related to the fact that:

threads share many data structures.

What happens if one thread closes a file,

while another one is still reading from it?

Suppose that one thread notices that there is too little

memory,

and starts allocating more memory.

Then, part way through, a thread switch occurs,

and the new thread also notices that there is too little memory,

and also starts allocating more memory.

Does the allocation happen once or twice?

In nearly all operating systems that were not designed with threads in

mind,

the libraries (such as the memory allocation procedure) are not

re-entrant,

and will crash if a second call is made while the first one is still

active.

https://en.wikipedia.org/wiki/Reentrancy_(computing)

A subroutine is called re-entrant,

if multiple invocations can safely run concurrently on multiple

processors,

or if on a single-processor system its execution can be

interrupted,

and a new execution of it can be safely started (it can be

“re-entered”).

Another problem relates to error reporting.

In UNIX, after a system call,

the status of the call is put into a global variable,

errno.

What happens if a thread makes a system call,

and before it is able to read errno,

another thread makes a system call,

wiping out the original value?

Some signals are logically thread specific; others are not.

For example, if a thread calls alarm,

it makes sense for the resulting response signal to go to the thread

that made the call.

When the kernel is aware of threads,

it can usually make sure the right thread gets the signal.

When the kernel is not aware of threads,

the threads package must keep track of alarms by itself.

An additional complication for user-level threads exists when (as in

UNIX),

a process may only have one alarm at a time pending,

and several threads call alarm independently.

Other signals, such as a keyboard-initiated SIGINT,

are not thread specific.

Who should catch them?

One designated thread?

All the threads?

A newly created thread?

Each of these solutions has problems.

What happens if one thread changes the signal handlers,

without telling other threads?

One last problem introduced by threads is stack management.

In many systems, when stack overflow occurs,

the kernel just provides more stack, automatically.

When a process has multiple threads,

it must also have multiple stacks.

If the kernel is not aware of all these stacks,

it cannot grow them automatically upon stack fault.

In fact, it may not even realize that a memory fault is related to stack

growth.

These problems are certainly not insurmountable.

However, just introducing threads into an existing system,

without a substantial system redesign, does not work.

The semantics of system calls have to be redefined,

and libraries have to be rewritten, at the very least.

And all of these modifications must be backward compatible with existing

programs,

for the limiting case of a process with only one thread.

Processes frequently need to communicate with other processes.

For example, in a shell pipeline,

the output of the first process must be passed to the second

process,

Further, pipelines can be chained.

There is a need for communication between processes,

preferably in a well-structured way, not using interrupts.

There are three issues here:

First,

How can one process pass information to another?

Second,

How can two or more processes not get into each other’s way,

when engaging in “critical” activities on shared resources?

For example, what if two processes each try to grab the last 1 MB of

memory?

Third,

When order dependencies are present,

how can the OS maintain proper sequencing?

If process A produces data, and process B prints it,

then B has to wait until A has produced some data,

before starting to print.

We will examine all three of these issues.

IPC for threads?

It is also important to mention that two of these issues apply equally

well to threads.

The first one, passing information, is easy for threads,

since they share a common address space.

Threads in different address spaces, that need to communicate,

fall under the category of communicating processes.

However, the other two,

keeping out of each other’s hair,

and proper order sequencing,

apply as well to threads.

The same problems exist and the same solutions apply.

Below we will discuss the problem in the context of processes,

but the same problems and solutions also apply to threads.

https://en.wikipedia.org/wiki/Race_condition

Processes that are working together may share some common

resource,

that each one can read and write.

The shared storage may be in main memory (possibly in a kernel data

structure)

or it may be a shared file on disk.

The location of the shared memory does not change the nature of the

communication,

or the problems that arise.

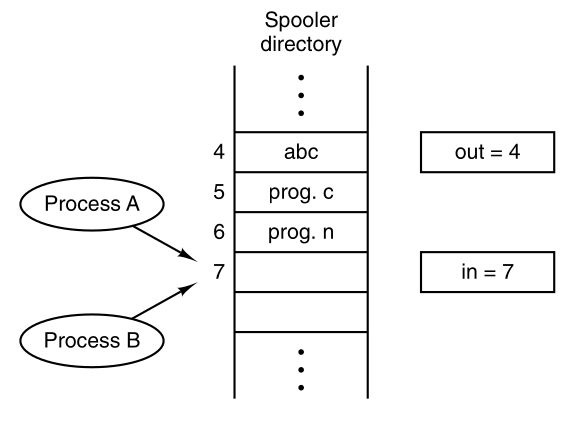

To see how inter-process communication works in practice,

let us consider a simple but common example, a print spooler.

When a process wants to print a file,

it enters the file name in a special spooler directory.

Another process, the printer daemon,

periodically checks to see if there are any files to be printed,

and if so, removes their names from the directory.

Imagine that our spooler directory has a large number of slots,

numbered 0, 1, 2, …, each one capable of holding a file name.

Also imagine that there are two shared variables,

out, which points to the next file to be printed, and

in, which points to the next free slot in the

directory.

These two variables might well be kept in a two-word file, available to

all processes.

At a certain instant, slots 0 to 3 are empty (the files have already

been printed),

and slots 4 to 6 are full (with the names of files to be printed).

More or less simultaneously,

processes A and B decide they want to queue a file for printing.

This is show below:

However, issues can occur, for example:

Process A reads in and stores the value, 7,

in a local variable called next_free_slot.

Just then, a clock interrupt occurs,

and the CPU decides that process A has run long enough,

so it switches to process B.

Process B also reads in, and also gets a 7,

so it stores the name of its file in slot 7,

and updates in to be an 8.

Then it goes off and does other things.

Eventually, process A runs again,

starting from the place it left off last time.

It looks at next_free_slot, finds a 7 there,

and writes its file name in slot 7,

erasing the name that process B just put there.

Then it computes next_free_slot + 1,

which is 8, and sets in to 8.

The spooler directory is now internally consistent,

so the printer daemon will not notice anything wrong,

but process B will never receive any output.

Situations like this,

where two or more processes are reading or writing some shared

data,

and the final result depends on who runs precisely when,

are called race conditions.

Debugging programs containing race conditions is no fun at all.

The results of most test runs are fine,

but rarely something weird and unexplained happens.

https://en.wikipedia.org/wiki/Mutual_exclusion

How do we avoid race conditions?

The key to preventing trouble here,

and in many other situations involving shared resources,

shared memory, shared files, and shared everything else,

is to prohibit concurrent access to shared resources,

prohibiting more than one process from reading and writing shared data

at the same time.

What we need is mutual exclusion.

If one process is using a shared variable or file,

the other processes should be excluded from doing the same.

The difficulty above occurred, because of concurrent shared

access:

Process B started using one of the shared variables,

before process A was finished with it.

We must choose appropriate primitive operations for achieving mutual

exclusion.

https://en.wikipedia.org/wiki/Critical_section

The problem of avoiding race conditions can be formulated

abstractly.

Part of the time, a process is busy doing computations on it’s own

data,

and other things that do not lead to race conditions.

However, sometimes a process may be accessing shared memory or

files.

There are parts of the program where the shared memory is

accessed.

These are called the critical regions or

critical sections.

Making sure two processes are ever in their critical regions at the same

time,

avoids race conditions.

This requirement of avoiding concurrent access to critical regions

avoids race conditions.

However, parallel processes can’t always cooperate correctly and

efficiently using shared data.

For efficiency, we want four conditions to hold,

to have a good solution:

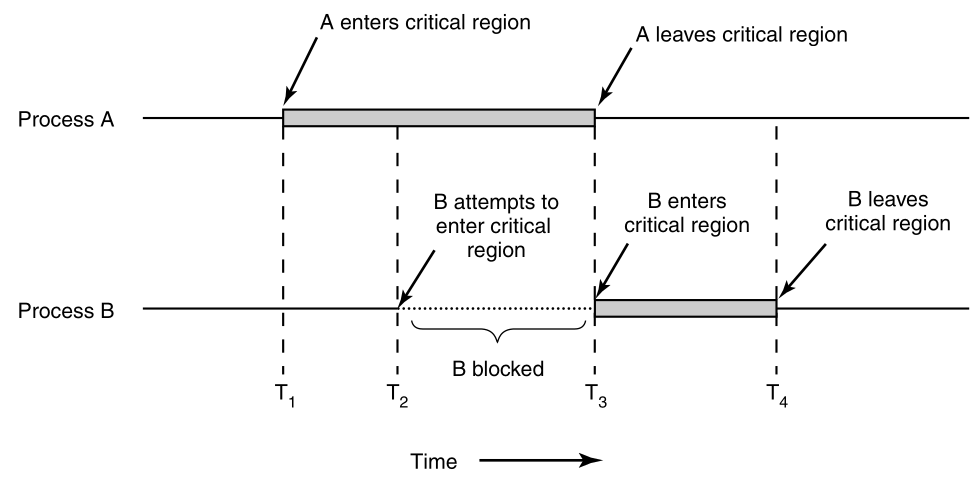

The behavior that we want is shown:

Here process A enters its critical region at time T1.

A little later, at time T2 process B attempts to enter its

critical region,

but fails because another process is already in its critical

region,

and we allow only one at a time.

Consequently, B is temporarily suspended until time T3,

when A leaves its critical region,

allowing B to enter immediately.

Eventually B leaves (at T4),

and we are back to the original situation,

with no processes in their critical regions.

+++++++++++++++++++ Cahoot-02-4

We now examine various proposals for achieving mutual

exclusion,

so that while one process is busy updating shared memory, in its

critical region,

no other process will enter its critical region and cause trouble.

Several mechanisms follow:

One simple solution is to have each process disable all

interrupts,

just after entering its critical region,

and re-enable them just before leaving it.

With interrupts disabled, no clock interrupts can occur.

The CPU is only switched from process to process,

as a result of clock or other interrupts.

With interrupts turned off,

the CPU will not be switched to another process.

Thus, once a process has disabled interrupts,

it can examine and update the shared memory,

without fear that any other process will intervene.

However, it is unwise to give user processes the power to turn off

interrupts.

Suppose that one of them did,

and then never turned them on again?

That could be the end of the system.

Further, if the system is a multiprocessor, with two or more

CPUs,

disabling interrupts affects only the CPU that executed the disable

instruction.

The other ones will continue running and can access the shared

memory.

The kernel itself can disable interrupts for a few

instructions,

while it is updating variables or lists.

Why?

For example,

if an interrupt occurred while the list of ready processes was in an

inconsistent state,

race conditions could occur.

Disabling interrupts is often a useful technique within the operating

system itself,

but is not appropriate as a general mutual exclusion mechanism for user

processes.

https://en.wikipedia.org/wiki/Lock_(computer_science)

As a second attempt, let us look for a software solution.

Consider having a single, shared, (lock) variable, initially 0.

When a process wants to enter its critical region,

it first tests the lock.

If the lock is 0,

then the process sets it to 1,

and enters the critical region.

If the lock is already 1,

then the process just waits until it becomes 0.

Lock of 0 means that no process is in its critical region,

and a 1 means that some process is in its critical region.

Unfortunately, this idea contains the same fatal flaw,

which we saw in the spooler directory example above.

Suppose that one process reads the lock, and sees that it is 0.

Before it can set the lock to 1,

another process is scheduled, runs, and sets the lock to 1.

When the first process runs again,

it will also set the lock to 1,

and two processes will be in their critical regions at the same

time.

Even first reading out the lock value,

and checking it again just before storing into it,

does not help.

The race now occurs if the second process modifies the lock,

just after the first process has finished its second check.

A third approach to the mutual exclusion problem is shown below:

while (TRUE) {

while (turn != 0) /* empty loop */ ;

critical_region();

turn = 1;

noncritical_region();

}while (TRUE) {

while (turn != 1) /* empty loop */ ;

critical_region();

turn = 0;

noncritical_region();

}In both cases, be sure to note the semicolons terminating the while

statements.

In the code above, the integer variable turn, initially

0,

keeps track of whose turn it is, to enter the critical region,

and examining or updating the shared memory.

Initially, process 0 inspects turn, finds it to be 0,

and enters its critical region.

Process 1 also finds it to be 0,

and therefore sits in a tight loop,

continually testing turn to see when it becomes 1.

Side note:

Continuously testing a variable until some value appears is called

busy waiting.

It should usually be avoided, since it wastes CPU time.

Only when the wait will be short is busy waiting usually used.

A lock that uses busy waiting is called a spin

lock.

When process 0 leaves the critical region, it sets turn to 1,

to allow process 1 to enter its critical region.

Suppose that process 1 finishes its critical region quickly,

so both processes are in their noncritical regions,

with turn set to 0.

Now process 0 executes its whole loop quickly,

exiting its critical region and setting turn to 1.

At this point turn is 1,

and both processes are executing in their noncritical regions.

Suddenly, process 0 finishes its noncritical region,

and goes back to the top of its loop.

Unfortunately, it is not permitted to enter its critical region

now,

because turn is 1 and process 1 is busy with its noncritical

region.

It hangs in its while loop until process 1 sets turn to 0.

When one of the processes is much slower than the other,

taking turns is not good for efficiency.

This situation violates condition 3 set out above:

process 0 is being blocked by a process not in its critical region.

Going back to the spooler directory discussed above,

if we now associate the critical region with reading and writing the

spooler directory,

process 0 would not be allowed to print another file,

because process 1 was doing something else.

This solution requires that the two processes strictly

alternate,

in entering their critical regions.

For example, in spooling files,

neither one would be permitted to spool two in a row.

While this algorithm does avoid all races,

it is not really a serious candidate as a solution,

because it violates condition 3 and is bad for efficiency.

In 1981, GL Peterson discovered a simpler way to achieve mutual

exclusion,

This algorithm consists of two procedures written in ANSI C,

which means that function prototypes should be supplied,

for all the functions defined and used.

To save space, we will not show the prototypes in this or subsequent

examples.

#define FALSE 0

#define TRUE 1

#define N 2 /* number of processes */

int turn; /* whose turn is it? */

int interested[N]; /* all values initially 0 (FALSE) */

void enter_region(int process) { /* process is 0 or 1 */

int other; /* number of the other process */

other = 1 - process; /* the opposite of process */

interested[process] = TRUE; /* show that you are interested */

turn = process; /* set flag */

while (turn == process && interested[other] == TRUE) /* spin */ ;

}

void leave_region(int process) { /* process: who is leaving */

interested[process] = FALSE; /* indicate departure from critical region */

}Before using the shared variables

(i.e., before entering its critical region),

each process calls enter_region(process_number),

with its own process number, 0 or 1, as the

parameter.

This call will cause it to wait, if need be, until it is safe to

enter.

After it has finished with the shared variables,

the process calls leave_region(process_number) to indicate

that it is done,

and to allow the other process to enter, if it so desires.

Initially, neither process is in its critical region:

Now process 0 calls enter_region.

0 indicates its interest by setting its array

element,

and sets turn to 0.

Since process 1 is not interested,

enter_region returns immediately.

If process 1 now calls enter_region,

1 will hang there until interested[0] goes to

FALSE,

an event that only happens when process 0 calls

leave_region,

to exit the critical region.

Now consider the case that both processes call

enter_region almost simultaneously.

Both will store their process number in turn.

The first one is lost (overwritten).

Suppose that process 0 is first,

and 1 stores afterwards, so turn is

1.

When both processes come to the while statement,

process 0 executes it zero times and enters its critical

region.

Process 1 loops, and does not enter its critical

region.

Now let us look at another proposal,

that requires a little help from the hardware.

Many computers, including with multiple processors in mind,

have an extra assembly instruction provided by the architecture:

TSL RX,LOCK

(Test and Set Lock) that works as follows:

it reads the contents of the memory word LOCK into register

RX,

and then stores a nonzero value at the memory address

LOCK.

Both operations of reading the word, and storing into it,

are guaranteed to be indivisible (executed together).

No other processor can access the memory word,

until the instruction is finished.

The CPU executing the TSL instruction, locks the memory

bus,

to prohibit other CPUs from accessing memory until it is done.

To use the TSL instruction, we will use a shared variable,

LOCK, to coordinate access to shared memory.

When LOCK is 0,

any process may set it to 1 using the TSL

instruction,

and then read or write the shared memory.

When it is done, the process sets LOCK back to

0,

using an ordinary move instruction.

How can this instruction be used,

to prevent two processes from simultaneously entering their critical

regions?

Entering and leaving a critical region using the TSL instructions in assembly pseudocode:

enter_region:

TSL REGISTER,LOCK | copy LOCK to register, and set LOCK to 1

CMP REGISTER,#0 | was LOCK zero?

JNE ENTER_REGION | if it was non zero, then LOCK was set, so loop

RET | return to caller; critical region entered

leave_region:

MOVE LOCK,#0 | store a 0 in LOCK

RET | return to callerThe user must use these functions correctly,

before and after entering and leaving critical regions.

If they do not, for whatever reason,

race conditions can still occur.

The first instruction copies the old value of LOCK to

the register,

and then sets LOCK to 1.

Then the old value is compared with 0.

If it is nonzero, the lock was already set,

so the program just goes back to the beginning,

and tests it again.

When the process currently in its critical region,

is done with its critical region,

the LOCK will become 0

and the subroutine returns, with the lock set.

Clearing the lock is simple.

The program just stores a 0 in LOCK.

No special instructions are needed.

One solution to the critical region problem is now

straightforward.

Before entering its critical region,

a process calls enter_region,

which does busy waiting until the LOCK is free;

then it acquires the lock and returns.

After the critical region, the process calls

leave_region,

which stores a 0 in LOCK.

As with all solutions based on critical regions,

for the method to work,

the processes must call enter_region and

leave_region at the correct times.

If a process cheats, the mutual exclusion will fail.

Both Peterson’s solution, and TSL, are correct.

However, both are inefficient, because they requiring busy waiting.

In essence, what these solutions do is this:

When a process wants to enter its critical region,

it checks to see if the entry is allowed.

If it is not, the process just spins in a tight loop waiting until it

is.

Not only does this approach waste CPU time,

but it can also have unexpected effects.

https://en.wikipedia.org/wiki/Priority_inversion

Consider a computer with two processes,

H, with high priority and

L, with low priority,

which share a critical region.

The scheduling rules specify that:

H is run whenever it is in ready state.

At a certain moment, with L in its critical

region,

H becomes ready to run

(e.g., an I/O operation completes).

H now begins busy waiting,

but since L is never scheduled while H is

running,

L never gets the chance to leave its critical

region,

so H loops forever.

This situation is sometimes referred to as the priority inversion problem.

Now let us look at some inter-process communication primitives,

that block and wait, instead of wasting CPU time,

when they are not allowed to enter their critical regions.

One of the simplest is the pair, sleep and

wakeup.

sleep is a system call that causes the caller to

block,

that is, be suspended until another process wakes it up.

The wakeup system call has one parameter,

the process to be awakened.

Alternatively, both sleep and wakeup can

each have one parameter,

a memory address used to match up sleeps with wakeups.

https://en.wikipedia.org/wiki/Producer-consumer_problem

This can be considered an abstract model of IPC,

recall the problem of sequencing mentioned above.

As an example of how these primitives can be used in practice,

let us consider the producer-consumer problem

(also known as the bounded buffer problem).

Two processes share a common, fixed-size buffer.

One of them, the producer, puts information into the buffer,

and the other one, the consumer, takes it out.

It is also possible to generalize the problem,

to have m producers and n consumers,

but we will only consider the case of one producer and one

consumer,

This assumption simplifies the solutions.

Trouble arises when the producer wants to put a new item in the

buffer,

but the buffer is already full.

The solution is for the producer to go to sleep,

to be awakened when the consumer has removed one or more items.

Similarly, if the consumer wants to remove an item from the

buffer,

and sees that the buffer is empty,

then it goes to sleep until the producer puts something in the

buffer,

and wakes it up.

This approach sounds simple enough,

but it leads to the same kinds of race conditions as earlier,

with the spooler directory.

To keep track of the number of items in the buffer,

we will need a variable, count.

If the maximum number of items the buffer can hold is N,

then the producer’s code will first test to see if count is

N.

If it is, then the producer will go to sleep;

if it is not, then the producer will add an item,

and increment count.

The consumer’s code is similar:

first test count, to see if it is 0.

If it is, go to sleep;

if it is nonzero, remove an item,

and decrement the counter.

Each of the processes also tests to see if the other should be

sleeping,

and if not, wakes it up.

The code for both producer and consumer is shown below:

The problem is the same,

two operations in a critical region,

which can be multi-tasked on by the CPU.

Thus, this producer-consumer solution below also has a fatal race

condition:

#define N 100 /* number of slots in the buffer */

int count = 0; /* number of items in the buffer */

void producer(void) {

int item;

while (TRUE) { /* repeat forever */

item = produce_item(); /* generate next item */

if (count == N) sleep(); /* if buffer is full, go to sleep */

insert_item(item); /* put item in buffer */

count = count + 1; /* increment count of items in buffer */

if (count == 1) wakeup(consumer); /* was buffer empty? */

}

}

void consumer(void) {

int item;

while (TRUE) { /* repeat forever */

if (count == 0) sleep(); /* if buffer is empty, got to sleep */

item = remove_item(); /* take item out of buffer */

count = count - 1; /* decrement count of items in buffer */

if (count == N - 1) wakeup(producer); /* was buffer full? */

consume_item(item); /* print item */

}

}To express system calls such as sleep and

wakeup in C,

we will show them as calls to library routines.

They are not part of the standard C library,

but presumably would be available on any system that actually had these

system calls.

The procedures enter_item and

remove_item,

definitions of which are not shown,

handle the bookkeeping of putting items into the buffer,

and taking items out of the buffer.

The race condition can occur,

because access to count is unconstrained.

The buffer is empty,

and the consumer has just read count, to see

if it is 0.

At that instant,

the scheduler decides to stop running the consumer,

and start running the producer.

The producer enters an item in the buffer,

increments count, and notices that it is now 1.

Reasoning that count was just 0,

and thus the consumer must be sleeping,

the producer calls wakeup to wake the

consumer up.

Unfortunately, the consumer is not yet logically asleep,

so the wakeup signal is lost.

When the consumer next runs,

it will test the value of count it previously read,

find it to be 0, and go to sleep.

Sooner or later the producer will fill up the buffer,

and also go to sleep.

Both will sleep forever.

A wakeup sent to a process,

that is not (yet) sleeping, is lost.

If it were not lost, then it would work.

A quick fix is to modify the rules,

to add a wakeup_waiting_bit to the picture.

When a wakeup is sent to a running process,

that is still awake, this bit is set.

Later, when the process tries to go to sleep,

if the wakeup_waiting_bit is on,

then it will be turned off,

but the process will stay awake.

The wakeup_waiting_bit is a piggy bank for

wakeup signals.

While this saves the day in this simple example,

it is easy to construct examples with three or more processes,

in which one wakeup_waiting_bit is insufficient.

We could make another patch, and add a second,

wakeup_waiting_bit2,

or maybe 8 or 32 of them, but in principle the problem is still

there…

https://en.wikipedia.org/wiki/Semaphore_(programming)

Dijkstra (1965) suggested using an integer variable,

to count the number of wakeups, saved for future use.

He named such an integer a semaphore.

A semaphore could have the value 0,

indicating that no wakeups were saved,

or some positive value,

if one or more wakeups were pending.

Dijkstra proposed defining two multi-part operations,

down and up

(which are generalizations of sleep and

wakeup, respectively).

down

The down operation on a semaphore checks if the value is greater than

0.

If so, it decrements the value (i.e., consumes one stored wakeup)

and just continues.

If the value is 0, then the process is put to sleep,

without completing the down operation.

This happens later, by a different process.

Checking the value, changing it, and possibly going to sleep,

must all done as a single, indivisible, atomic action.

It must be guaranteed that once a semaphore operation has started,

no other process can access the semaphore,

until the operation has completed or blocked.

In solving synchronization problems and avoiding race conditions,

This atomicity is absolutely essential.

up

The up operation increments the value of the semaphore addressed.

If one or more processes were sleeping on that semaphore,

unable to complete an earlier down operation,

one of them is chosen by the system (e.g., at random),

and is allowed to complete its down.

Thus, after an up on a semaphore, with processes sleeping

on it,

the semaphore will still be 0,

but there will be one fewer process sleeping on it.

The operation of incrementing the semaphore,

and waking up one process, must also be indivisible.

No process must ever block doing an up operation,

just as in the earlier model,

where no process ever blocks when doing a wakeup.

As an aside, in Dijkstra’s original paper,

he used the names p and v instead of down and up, respectively,

but since these have no mnemonic significance to people who do not speak

Dutch

(and only marginal significance to those who do),

we will use the names down and up instead.

It is essential that they be implemented in an indivisible way.

The normal way is to implement up and down as

system calls,

with the operating system briefly disabling all interrupts while it

is:

testing the semaphore,

updating it,

and if necessary, putting the process to sleep.

Since these several actions take only a few instructions,

no harm is done in disabling interrupts.

If multiple CPUs are being used,

each semaphore itself should be protected by a lock variable,

with the TSL instruction used,

to make sure that only one CPU at a time examines the semaphore.

We use TSL to prevent several CPUs from accessing the

semaphore at the same time.

This is quite different from a spin lock,

busy waiting by the producer for space in the buffer,

or consumer waiting, for the other to empty or load the buffer.

The distinction is in the duration of time.

The semaphore operation only takes a few microseconds,

whereas the producer or consumer might take arbitrarily long.

The multiple operations in up and down

themselves must be indivisible.

We use TSL to accomplish this, as above.

Below, we do not show those implementation of up and

down,

but assume they are correct, and apply them.

The code below illustrates two ways up and down can be used.

First, two semaphores are used for the producer-consumer problem.

Second, up and down with a binary lock can

be used to efficiently protect regions,

that themselves are critical, but may take longer than a

semaphore,

in which case, we may call it a mutex instead.

#define N 100 /* number of slots in the buffer */

typedef int semaphore; /* semaphores are a special kind of int */

semaphore mutex = 1; /* controls access to critical region */

semaphore empty = N; /* counts empty buffer slots */

semaphore full = 0; /* counts full buffer slots */

void producer(void) {

int item;

while (TRUE) { /* TRUE is the constant 1 */

item = produce_item(); /* generate something to put in buffer */

down(&empty); /* decrement empty count */

down(&mutex); /* enter critical region */

insert_item(item); /* put new item in buffer */

up(&mutex); /* leave critical region */

up(&full); /* increment count of full slots */

}

}

void consumer(void) {

int item;

while (TRUE) { /* infinite loop */

down(&full); /* decrement full count */

down(&mutex); /* enter critical region */

item = remove_item(); /* take item from buffer */

up(&mutex); /* leave critical region */

up(&empty); /* increment count of empty slots */

consume_item(item); /* do something with the item */

}

}Reminder: this is not the implementation of the semaphore

itself,

but the utilization of it for a similar purpose.

This solution uses three indivisible integers,

one called full,

for counting the number of slots that are full,

one called empty,

for counting the number of slots that are empty,

and one called mutex,

to make sure the producer and consumer do not access the buffer at the

same time.

up and down as semaphores guarantees their

updates to these ints are indivisible.

full is initially 0,

empty is initially equal to the number of slots in the

buffer,

and mutex is initially 1.

Binary semaphores are initialized to 1,

and used by two or more processes,

to ensure that only one of them can enter its critical region at the

same time.

If each process does a down operation just before entering

its critical region,

and an up just after leaving it,

then mutual exclusion to the shared data is guaranteed.

Producer consumer as a model of IPC

Now that we have a good inter-process communication primitive at our

disposal,

recall the example interrupt sequence we covered above,

Generalizing previous disk access interrupt example above:

In a system using semaphores,

the natural way to hide interrupts is to have a mutex semaphore,

initially set to 0, associated with each I/O device.

Just after starting an I/O device,

the managing process does a down operation on the

associated semaphore,

thus blocking immediately.

When the interrupt comes in,

the interrupt handler then does an up operation on the

associated semaphore,

which makes the relevant process ready to run again.

Step 6 in the image above,

consists of doing an up on the device’s semaphore,

so that in step 7 the scheduler will be able to run the device

manager.

If several processes are now ready,

then the scheduler may choose to run an even more important process

next.

We will look at how scheduling is done later in this chapter.

In the example code above,

we have actually used semaphores in two different ways.

This difference is important to make explicit.

Synchronization:

One use of semaphores is for synchronization.

Both the full and empty semaphores are needed,

to guarantee that certain event sequences do or do not occur:

They ensure that the producer stops running when the buffer is

full,

and the consumer stops running when it is empty.

Second, use of semaphores for mutual exclusion,

is different.

Mutual exclusion:

The mutex semaphore is used for accomplishing efficient

mutual exclusion.

It is designed to guarantee that only one process at a time,

will be reading or writing shared data,

the buffer, and the associated variables.

This mutual exclusion is required to prevent chaos,

caused by concurrent editing of a shared resource.

https://en.wikipedia.org/wiki/Lock_(computer_science)

When the semaphore’s ability to count is not needed,

a simplified version of the semaphore is called a mutex.

Mutexes are good only for managing mutual exclusion,

to some shared resource or piece of code.

They are easy and efficient to implement,

which makes them especially useful,

They are often used in non-kernel thread packages,

that are implemented entirely in user space.

A mutex is a variable that can be in one of two states:

unlocked or locked.

Consequently, only 1 bit is required to represent it,

but in practice, an integer often is used,

with 0 meaning unlocked,

and all other values meaning locked.

Two procedures are used with mutexes.

lock

When a process (or thread) needs access to a critical region,

it calls mutex_lock.

If the mutex is currently unlocked,

meaning that the critical region is available,

the call succeeds,

and the calling thread is free to enter the critical region.

unlock

If the mutex is already locked,

then the caller is blocked,

until the process in the critical region is finished,

and calls mutex_unlock.

If multiple processes are blocked on the mutex,

then one of them is chosen at random,

and allowed to acquire the lock.

Both lock and unlock operations may be

implemented with TSL.

But, they are different than TSL itself,

because they add the feature of blocking/sleeping/suspending.

https://en.wikipedia.org/wiki/Monitor_(synchronization)

Deadlocks

When programming the above example,

It is easy to make mistakes.

In the semaphore code above,

look closely at the order of the down calls,

before entering or removing items from the buffer.

Suppose that the two down calls in the producer’s code were

reversed in order,

so mutex was decremented before empty, instead of after it.

If the buffer were completely full,

then the producer would block,

with mutex set to 0.

Consequently, the next time the consumer tried to access the

buffer,

it would do a down on mutex, now 0, and block

too.

Both processes would stay blocked forever,

and no more work would ever be done.

This unfortunate situation is called a deadlock.

We will study deadlocks later!

This problem is pointed out,

to show how careful you must be when using semaphores.

One subtle error, and everything comes to a grinding halt.

It is like programming in assembly language,

only worse, because the errors are race conditions, deadlocks,

and other forms of unpredictable and irreproducible behavior.

Monitors:

To make it easier to write correct programs,

we can use higher level synchronization primitive called a monitor.

A monitor is a collection of procedures, variables, and data

structures,

that are all grouped together in a special kind of module or

package.

Processes may call the procedures in a monitor whenever they want

to,

but they cannot directly access the monitor’s internal data

structures,

from procedures declared outside the monitor.

Below, we illustrate a monitor,

written in an imaginary language, Pidgin Pascal:

monitor example

integer i;

condition c;

procedure producer(x);

.

.

.

end;

procedure consumer(x);

.

.

.

end;

end monitor;Monitors have a key property that makes them useful for achieving

mutual exclusion:

only one process can be active in a monitor at any instant.

Monitors are a programming language construct,

so the compiler knows they are special,

and can handle calls to monitor procedures,

differently from other procedure calls.

Typically, when a process calls a monitor procedure,

the first few instructions of the procedure will perform a check,

to see if any other process is currently active within the

monitor.

If so, the calling process will be suspended,

until the other process has left the monitor.

If no other process is using the monitor,

the calling process may enter.

The compiler implements the mutual exclusion on monitor

entries.

A common way is to use a mutex or binary semaphore.

However, because the compiler, not the programmer,

arranges for the mutual exclusion,

it is much less likely that something will go wrong.

By merely turning all the critical regions into monitor

procedures,

no two processes will ever execute their critical regions at the same

time.

Efficiency

Although monitors provide an easy way to achieve mutual exclusion,

as we have seen above, that is not enough for efficiency.

We also need a way for processes to block, when they cannot

proceed.

In the producer-consumer problem,

it is easy enough to put all the tests for buffer-full and buffer-empty

in monitor procedures,

but how should the producer block, when it finds the buffer full?

Wait and Signal

The solution is to have:

condition variables,

and two operations on them, wait and

signal.

When a monitor procedure discovers that it cannot continue

(e.g., the producer finds the buffer full),

then it does a wait on some condition variable, say,

full.

This action causes the calling process to block.

Another process that had been previously prohibited from entering the

monitor,

is now allowed to enter it.

This other process, for example, the consumer,

can wake up its sleeping partner,

by sending a signal on the condition variable that its partner is

waiting on.

To avoid having two active processes in the monitor at the same

time,

we need a rule telling what happens after a signal.

One solution is to let the newly awakened process run,

suspending the other one.

A second solution requires any process sending a signal to exit the

monitor immediately.

A signal statement may appear only as the final statement in a monitor

procedure.

This proposal is conceptually simpler,

and is also easier to implement.

If a signal is sent on a condition variable,

on which several processes are waiting,

only one of them, determined by the system scheduler, is revived.

There is also a third solution,

This is to let the signaler continue to run,

and only after the signaler has exited the monitor,

then allow the waiting process to start running,

Condition variables are not counters.

They do not accumulate signals for later use the way semaphores

do.

If a condition variable is signaled with no one waiting on it,

then the signal is lost.

The wait must come before the signal.

This rule makes the implementation much simpler.

To accommodate for lost signals,

we keep track of the state of each process with variables, if need

be.

A process that might otherwise send a signal,

can see that this operation is not necessary,

by looking at the variables.

A skeleton of the producer-consumer problem with monitors is shown

below.

Only one monitor procedure at a time is active.

The buffer has N slots.

monitor ProducerConsumer

condition full, empty;

integer count;

procedure insert(item: integer);

begin

if count = N then wait(full);

insert_item(item);

count := count + 1;

if count = 1 then signal(empty)

end;

procedure remove: integer;

begin

if count = 0 then wait(empty);

remove = remove_item;

count := count 1;

if count = N 1 then signal(full)

end;

count := 0;

end monitor;

procedure producer;

begin

while true do

begin

item = produce_item;

ProducerConsumer.insert(item)

end

end;

procedure consumer;

begin

while true do

begin

item = ProducerConsumer.remove;

consume_item(item)

end

end;Operations wait and signal look similar to

sleep and wakeup,

which we saw earlier had possible fatal race conditions.

These now have one crucial difference:

sleep and wakeup failed because while one

process was trying to go to sleep,

the other one was trying to wake it up.

With monitors, that cannot happen.

The automatic mutual exclusion on monitor procedures guarantees

that,

if the producer inside a monitor procedure discovers that the buffer is

full,

it will be able to complete the wait operation,

without having to worry about the possibility that,

the scheduler may switch to the consumer just before the

wait completes.

The consumer will not even be let into the monitor at all,

until the wait is finished and the producer is marked as no longer

runnable.

Although Pidgin Pascal is an imaginary language,

some real programming languages also support monitors.

One such language is Java.

Java supports user-level threads,

and also allows methods (procedures) to be grouped together into

classes.

By adding the keyword synchronized to a method

declaration,

Java guarantees that once any thread has started executing that

method,

no other thread will be allowed to start executing any other

synchronized method in that class.

synchronized methods in Java differ from classical