https://www.reddit.com/r/ProgrammerHumor/comments/a2c4gg/quality_assurance/

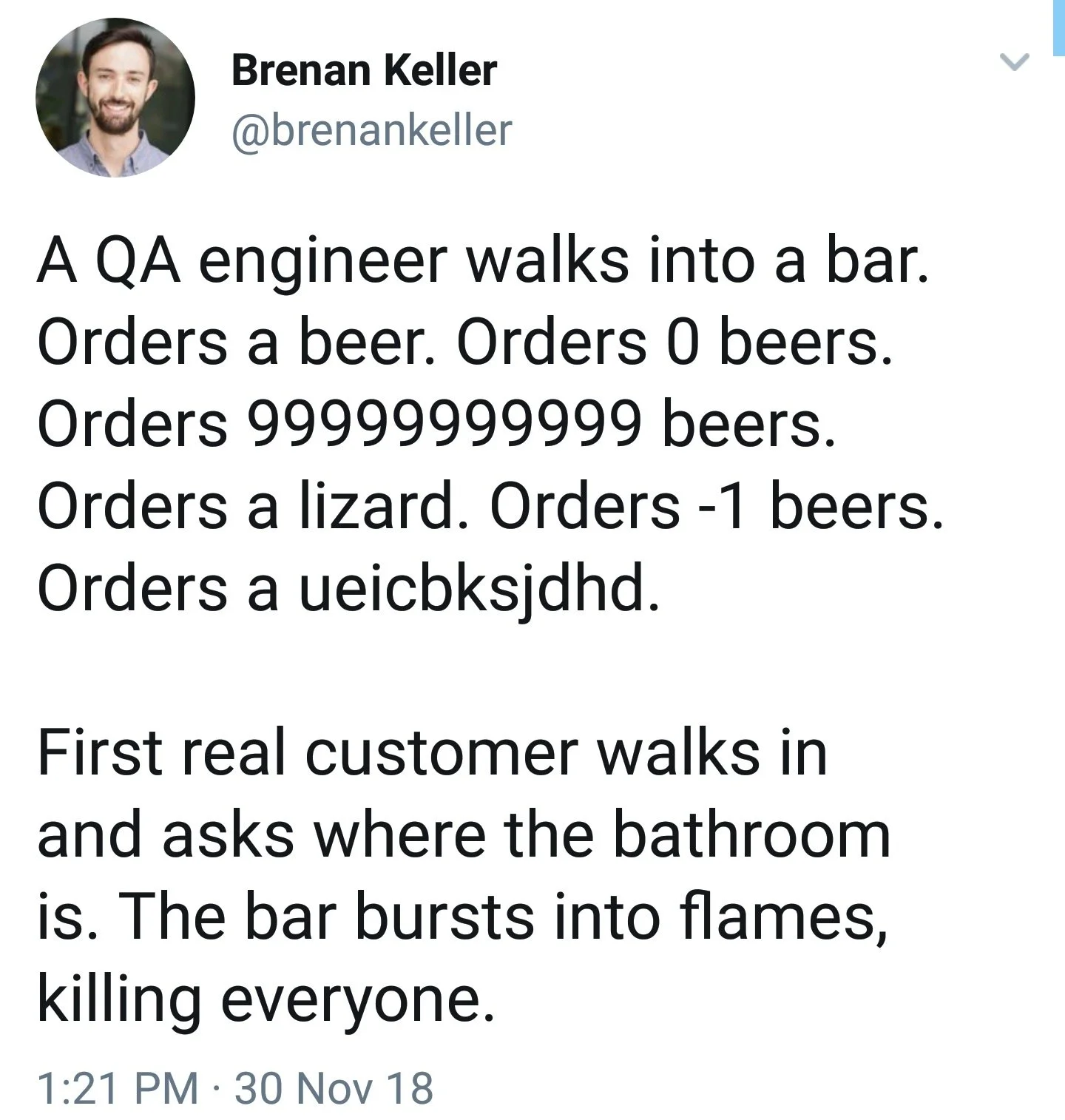

If you like to break things (mostly software), then QA or software testing may be a good job for you.

The top r/ProgrammerHumor post of all time… QA stands for Quality Assurance.

document.querySelector('video').playbackRate = 1.2

https://en.wikipedia.org/wiki/Test-driven_development

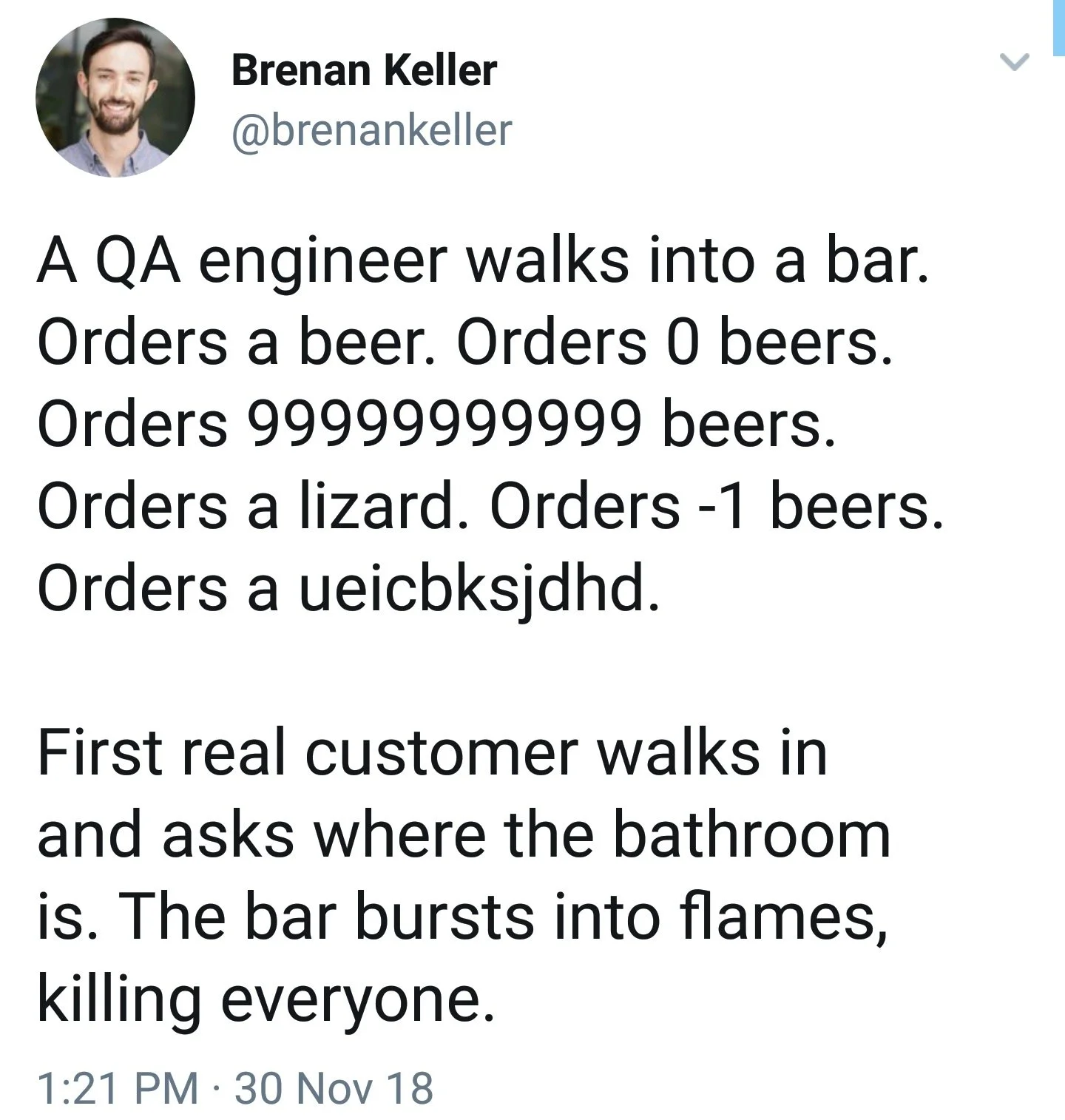

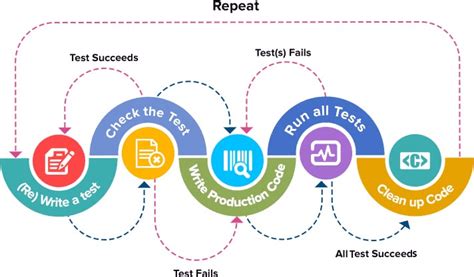

Note: the Test fails/succeeds logic above might seem backwards, but it’s not!

Note:

* Some people complain that TDD is not realistic.

* Doing it for open-ended code that does not exist yet often isn’t!

* Realistic TDD starts with a base of functioning code, for which you then write tests; the TDD part picks up mostly when adding new features, not when writing tests before any code exists at all…

* This is a very common situation!

1. Add a test

* In test-driven development, each new feature begins with writing a test.

* Write a test that defines a function, or improvements of a function, which should be very succinct.

* To write a test, the developer must clearly understand the feature’s specification and requirements.

* The developer can accomplish this through use cases and user stories to cover the requirements and exception conditions, and can write the test in whatever testing framework is appropriate to the software environment.

* It could be a modified version of an existing test.

* This is a differentiating feature of test-driven development, versus writing unit tests after the code is written:

  * It makes the developer focus on the requirements before writing the code for the feature addition, a subtle but important difference.

2. Run all tests and see if the new test fails (it should)

* This validates that the test harness is working correctly, shows that the new test does not already pass without new code (which would mean the required behavior already exists), and rules out the possibility that the new test is flawed and will always pass.

* The new test should fail for the expected reason (it’s not implemented yet…).

* This step increases the developer’s confidence in the new test.

3. Write the code

* The next step is to write some code that causes the test to pass.

* The new code written at this stage is not perfect and may, for example, pass the test in an inelegant way.

* That is acceptable, because it will be improved and honed in Step 5.

* At this point, the only purpose of the written code is to pass the test.

* The programmer must not write code that is beyond the functionality that the test checks.
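A sketch of Step 3 for a hypothetical `is_leap_year` test: the quickest code that passes simply hard-codes the leap years the test happens to check (often called "fake it"). It is inelegant and repeats the test’s magic numbers, which is exactly the kind of duplication Step 5 is meant to remove.

```python
def is_leap_year(year: int) -> bool:
    # "Fake it": return True only for the leap years the test checks.
    # Inelegant, and it repeats the test's magic numbers, but the test passes.
    return year in (2000, 2024)

def test_is_leap_year():
    assert is_leap_year(2024)
    assert not is_leap_year(2023)
    assert not is_leap_year(1900)
    assert is_leap_year(2000)

test_is_leap_year()  # raises AssertionError if any check fails; here none does
```

This version wrongly reports 2028 as a non-leap year: nothing beyond what the test checks has been implemented, as the rule above requires.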

4. Run tests

* If all test cases now pass, the programmer can be confident that the new code meets the test requirements, and does not break or degrade any existing features.

* If they do not, the new code must be adjusted until they do.

5. Re-factor code

* The growing code base must be cleaned up regularly during test-driven development.

* New code can be moved from where it was convenient for passing a test to where it more logically belongs.

* Duplication must be removed.

* Object, class, module, variable, and method names should clearly represent their current purpose and use, as extra functionality is added.

* As features are added, method bodies can get longer and other objects larger.

* They benefit from being split and their parts carefully named to improve readability and maintainability, which will be increasingly valuable later in the software life cycle.

* Inheritance hierarchies may be rearranged to be more logical and helpful, and perhaps to benefit from recognized design patterns.

* There are specific and general guidelines for re-factoring and for creating clean code.

* By continually re-running the test cases throughout each re-factoring phase, the developer can be confident that the process is not altering any existing functionality.

* The concept of removing duplication is an important aspect of any software design.

* In this case, it also applies to the removal of any duplication between the test code and the production code, for example magic numbers or strings repeated in both to make the test pass in Step 3.
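A sketch of that duplication removal for a hypothetical `is_leap_year` whose first passing version was `return year in (2000, 2024)`, repeating the test’s magic numbers: refactoring replaces the hard-coded years with the general Gregorian rule, and the unchanged test is re-run to confirm the behavior it checks is preserved.

```python
def is_leap_year(year: int) -> bool:
    """Gregorian rule: divisible by 4, except century years not divisible by 400."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)

def test_is_leap_year():
    assert is_leap_year(2024)
    assert not is_leap_year(2023)
    assert not is_leap_year(1900)
    assert is_leap_year(2000)

test_is_leap_year()  # re-run after refactoring: same test, still passing
```

The test code and production code no longer share the literal years 2000 and 2024, and the function now also handles years the test never mentioned, such as 2028.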

Repeat

* Starting with another new test, the cycle is then repeated to push forward the functionality.

* The size of the steps should always be small, with as few as 1 to 10 edits between each test run.

* If new code does not rapidly satisfy a new test, or other tests fail unexpectedly, the programmer should undo or revert in preference to excessive debugging.

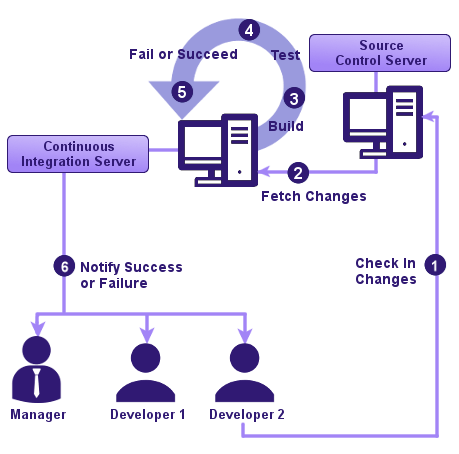

* Continuous integration helps by providing revertible checkpoints.

* When using external libraries, it is important not to make increments that are so small as to be effectively merely testing the library itself, unless there is some reason to believe that the library is buggy or is not sufficiently feature-complete to serve all the needs of the software under development.

https://en.wikipedia.org/wiki/Continuous_testing

https://en.wikipedia.org/wiki/Continuous_integration

https://en.wikipedia.org/wiki/Deployment_environment

When people are responsible for running tests manually, they get put off and don’t run them…

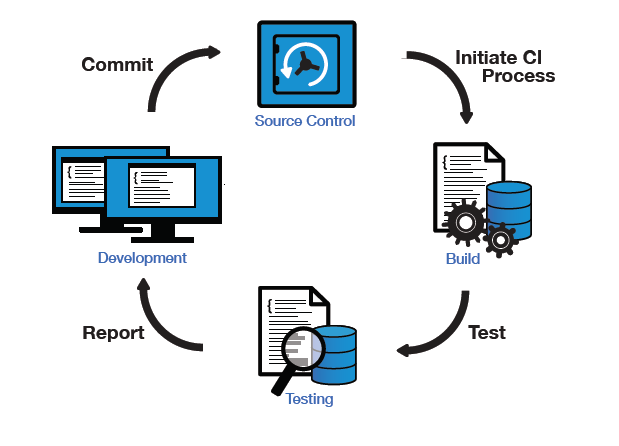

Continuous testing was originally proposed as a way of reducing waiting time for feedback to developers by introducing development environment-triggered tests as well as more traditional developer/tester-triggered tests.

Continuous testing is the process of executing automated tests as part of the software delivery pipeline to obtain immediate feedback on the business risks associated with a software release candidate.

For Continuous testing, the scope of testing extends from validating bottom-up requirements or user stories to assessing the system requirements associated with overarching business goals.
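Automating the run is what removes the "nobody ran the tests" problem. As a minimal sketch, assuming a plain `unittest` suite in files named `test*.py` (the function names `run_suite` and `main` are hypothetical), this is the kind of entry point a CI job or git hook could call on every change:

```python
import unittest

def run_suite(start_dir: str = ".") -> bool:
    """Discover and run every test*.py module under start_dir; True if all pass."""
    # Same discovery rule as `python -m unittest discover`.
    suite = unittest.TestLoader().discover(start_dir)
    result = unittest.TextTestRunner(verbosity=1).run(suite)
    return result.wasSuccessful()

def main() -> int:
    """Exit status for CI: 0 if everything passed, 1 otherwise."""
    return 0 if run_suite() else 1
```

A CI job or hook script would end with `sys.exit(main())`; the nonzero exit status on failure is what marks that pipeline step as failed and blocks the release candidate.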

+++++++++++ Cahoot-19.1

https://mst.instructure.com/courses/58101/quizzes/57205

What must precede a statement to be executed in a doctest?

(forgot to show in lecture)

Notes:

To debug with pudb:

* https://documen.tician.de/pudb/starting.html

* https://stackoverflow.com/questions/25182812/using-python-pudb-debugger-with-pytest

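Put this at the top of your file (or on the line where you want execution to stop):

import pudb; pu.db

And run pytest (or nose) with output capturing disabled, so the debugger can use the terminal:

pytest -s yourfile.py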

+++++++++++ Cahoot-19.2

https://mst.instructure.com/courses/58101/quizzes/57206

What simple testing mechanism do pytest and nosetests employ?

(forgot to show in lecture)

Reading:

* https://docs.python.org/3/library/unittest.mock-examples.html

* https://docs.python.org/3/library/unittest.mock.html?highlight=mock#module-unittest.mock

* https://realpython.com/python-mock-library/

* https://www.geeksforgeeks.org/python-testing-output-to-stdout/

Examples:

* 19-TestingFrameworks/testing_05_io.py

Notes:

* Rather than using mock, it is often simpler to overwrite sys.stdin or sys.stdout directly, as we did before:

* 18-InputOutput.html
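A minimal sketch of both approaches, using a hypothetical `greet` function and a hypothetical `run_with_io` helper (neither is taken from `testing_05_io.py`). The first test swaps `sys.stdin`/`sys.stdout` for `io.StringIO` buffers directly and always restores them; the second does the same job with `unittest.mock.patch`.

```python
import io
import sys
from unittest import mock

def greet():
    """Hypothetical function under test: read a name from stdin, print a greeting."""
    name = input("Name: ")
    print(f"Hello, {name}!")

def run_with_io(func, stdin_text: str) -> str:
    """Run func with sys.stdin/sys.stdout overwritten by in-memory buffers.

    Returns everything func printed; the original streams are always restored.
    """
    saved_stdin, saved_stdout = sys.stdin, sys.stdout
    sys.stdin, sys.stdout = io.StringIO(stdin_text), io.StringIO()
    try:
        func()
        return sys.stdout.getvalue()
    finally:
        sys.stdin, sys.stdout = saved_stdin, saved_stdout

def test_greet_direct():
    # input() writes its prompt to sys.stdout when the streams are not a terminal
    assert run_with_io(greet, "Ada\n") == "Name: Hello, Ada!\n"

def test_greet_mock():
    # With mock, input() itself is replaced, so no prompt is printed
    with mock.patch("builtins.input", return_value="Ada"), \
         mock.patch("sys.stdout", new_callable=io.StringIO) as fake_out:
        greet()
    assert fake_out.getvalue() == "Hello, Ada!\n"
```

The direct version exercises the real `input()` and `print()` calls, prompt included; the mock version is shorter but replaces `input()` entirely, so it cannot catch a wrong prompt.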

https://en.wikipedia.org/wiki/Mutation_testing

If you have working code and supposedly working unit tests, and you break your code using logic changes (not syntax errors), but your unit tests do not fail, then your unit tests were not good enough. Mutation testing frameworks automatically introduce small, constrained errors (configurable kinds of logic errors, such as swapping >= for >) into the code and check whether your tests catch them.
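To make the idea concrete, here is a toy mutation tester (a hypothetical sketch requiring Python 3.9+ for `ast.unparse`; real tools such as mutmut do far more). It uses Python’s `ast` module to flip one comparison operator at a time in a small function, runs a test function against each mutant, and reports the mutants that survive. The weak tests never check the boundary age, so the `>=` to `>` mutant survives; adding a check at 18 kills it.

```python
import ast

# Original code under test (a hypothetical example).
SOURCE = """
def is_adult(age):
    return age >= 18
"""

# A tiny mutation set: swap each comparison operator for a "nearby" one.
SWAPS = {ast.GtE: ast.Gt, ast.Gt: ast.GtE, ast.LtE: ast.Lt,
         ast.Lt: ast.LtE, ast.Eq: ast.NotEq, ast.NotEq: ast.Eq}

class SwapOneComparison(ast.NodeTransformer):
    """Replace only the `target`-th swappable comparison operator in the tree."""

    def __init__(self, target: int):
        self.target = target
        self.seen = 0

    def visit_Compare(self, node):
        self.generic_visit(node)
        for i, op in enumerate(node.ops):
            if type(op) in SWAPS:
                if self.seen == self.target:
                    node.ops[i] = SWAPS[type(op)]()
                self.seen += 1
        return node

def count_mutation_points(tree) -> int:
    return sum(type(op) in SWAPS
               for node in ast.walk(tree) if isinstance(node, ast.Compare)
               for op in node.ops)

def load(tree) -> dict:
    """Compile and execute a (possibly mutated) module; return its namespace."""
    namespace = {}
    exec(compile(ast.fix_missing_locations(tree), "<mutant>", "exec"), namespace)
    return namespace

def weak_tests(ns) -> bool:
    f = ns["is_adult"]
    return f(30) and not f(5)

def strong_tests(ns) -> bool:
    f = ns["is_adult"]
    return f(30) and not f(5) and f(18)  # also checks the boundary

def surviving_mutants(source: str, tests) -> list:
    """Return the source of every mutant that the tests fail to detect."""
    assert tests(load(ast.parse(source))), "tests must pass on the original code"
    survivors = []
    for i in range(count_mutation_points(ast.parse(source))):
        mutant = SwapOneComparison(i).visit(ast.parse(source))
        if tests(load(mutant)):  # tests still pass -> the mutant survived
            survivors.append(ast.unparse(mutant))
    return survivors

print(surviving_mutants(SOURCE, weak_tests))    # one survivor, using "age > 18"
print(surviving_mutants(SOURCE, strong_tests))  # [] : every mutant is killed
```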